Async map with limited parallelism in JavaScript

Using generators and promises to control backpressure

📢 Announcement: I’m introducing a new section to this newsletter for posts like this one that dig into the actual coding craft, because a lot of what I do in terms of coding and architecture doesn’t fit into the other sections. As usual, you can disable emails for this new section in your account settings.

This post shows a simple but clever technique to use JavaScript generators for controlling parallelism when mapping a huge array using async functions.

The result is a reusable pattern to process large arrays asynchronously while:

Keeping resource consumption fixed

Avoiding external penalties like API rate limits

If you want to jump to the code, here is the example code (MIT license).

🤖🚫 No generative AI was used to create this content. This page is only intended for human consumption and is NOT allowed to be used for machine training including but not limited to LLMs. Copyright (C) 2025 Alex Ewerlöf. All rights reserved. (why?)

Problem

Array.map() is one of the most useful functions in JavaScript. It takes an array and runs each element through a function to get a new array:

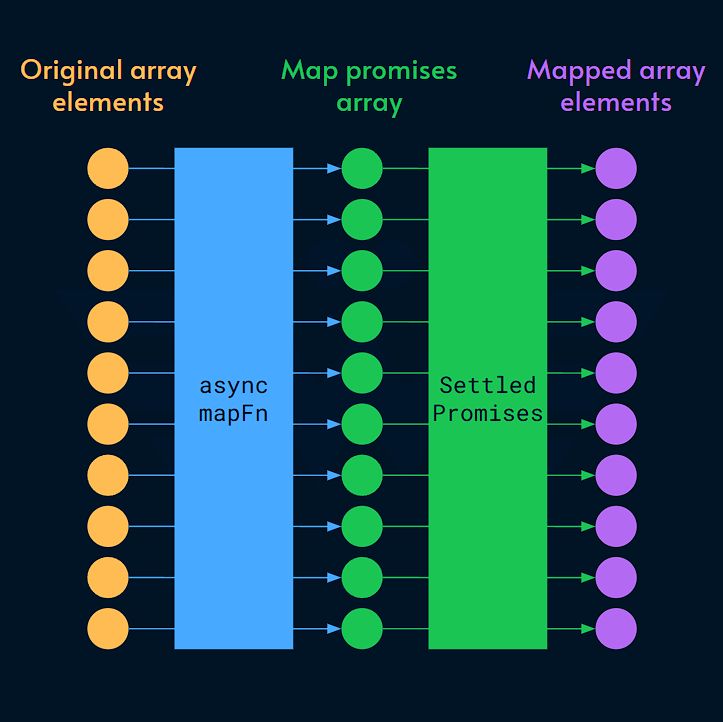

Unfortunately, this doesn’t work as neatly when the map function is async. In that case, you get an array of promises which need to be resolved to get the final results:
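A minimal sketch of the issue, assuming a hypothetical `double()` function that stands in for a real async map function:

```typescript
// Hypothetical async map function: resolves to twice its input.
async function double(n: number): Promise<number> {
  return n * 2;
}

const numbers = [1, 2, 3];

// Array.map() does not await anything, so it collects promises, not numbers.
const pending: Promise<number>[] = numbers.map((n) => double(n));

console.log(pending[0] instanceof Promise); // true
```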

There are two ways to convert the array of promises to values:

Promise.all(arr.map(mapFn)): rejects as soon as the async mapFn throws for any of the array elements.

Promise.allSettled(arr.map(mapFn)): waits for the async mapFn to settle for the whole array, even if it sometimes throws.

The latter is better because we have no control over the execution order of those async map functions, so it’s better to wait till all elements are processed.

The output of the .allSettled() is an array of objects that tells whether the execution failed or not. According to MDN, it looks like this:
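As a rough illustration of that shape, here is a small sketch. The `halve()` function is a hypothetical map function that fails for odd numbers, and the comment at the end describes the documented `status` / `value` / `reason` fields:

```typescript
// Hypothetical async map function that fails for odd inputs.
async function halve(n: number): Promise<number> {
  if (n % 2 !== 0) throw new Error(`${n} is odd`);
  return n / 2;
}

const settled: Promise<PromiseSettledResult<number>[]> = Promise.allSettled(
  [2, 3, 4].map((n) => halve(n)),
);

settled.then((results) => {
  for (const result of results) {
    if (result.status === "fulfilled") {
      console.log("value:", result.value);
    } else {
      console.log("reason:", result.reason.message);
    }
  }
});

// Shape of the settled results:
// [
//   { status: "fulfilled", value: 1 },
//   { status: "rejected", reason: Error("3 is odd") },
//   { status: "fulfilled", value: 2 },
// ]
```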

There’s a catch though: unlike a “normal” .map(), async map functions do not necessarily settle serially. The JavaScript event loop typically executes async functions in multiple passes: any time there’s a wait (usually IO), it proceeds to whatever it can execute next.

This introduces two problems:

The async functions take hold of resources like memory, files and TCP ports until they’re done.

If they try to access a rate-limited resource simultaneously (e.g., an API endpoint), some of them may fail under the spike in the load.

To visualize the process, here’s how synchronous map functions run over time:

As you can see, only one synchronous function is being executed at a time, which means the resource usage stays the same while processing all elements.

The situation is different with async functions:

The diagrams above show how a small array with only 10 elements takes 10x more memory and resources initially before they are released as the functions finish execution.

Both problems are much worse when the array has thousands or even millions of elements to be processed asynchronously.

Solution

Let’s recap the requirements:

We want a way to map an array using an asynchronous function

We want to limit the number of asynchronous functions that are running at a given time

We want to do it using minimal code

The solution should be compatible with the signature of the plain JavaScript Promise.allSettled(arr.map(mapFn)).

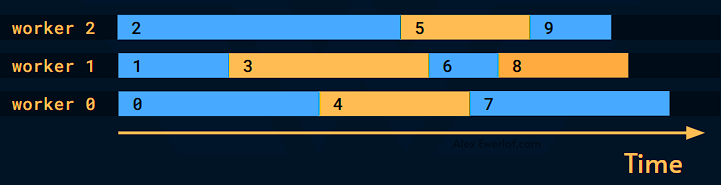

Essentially, we want only a few of those async map functions to be running at any given time. Here’s an example for when only 3 are running simultaneously:

You can see that the resource consumption is reduced regardless of the array size. Also, the execution is spread over time which makes it less likely to hit external limits like API rate-limits.

If you want to use a library, there are a couple of options:

The eachLimit() function from the popular async module (docs)

The Bluebird.map() function from Bluebird with the concurrency option set to a lower value than the array length (docs)

As we’ll see, this problem doesn’t really require adding an entire dependency and having to deal with its costs: security, keeping it up to date, space, etc.

Generators

Generators came from Python to JavaScript. Although they’re not as popular, this is one of those cases where they come in handy.

Note that there are async generators too, but we don’t need them for this use case.

The algorithm I’ve come up with (in pre-AI era 😎) has 4 components:

runMapFn(): A helper higher-order function that runs the async map function and transforms its result into a format that is compatible with Promise.allSettled()

worker(): A couple of worker functions that fetch the parameters of the async map function from a shared queue. The number of workers controls the level of parallelism we want. Note: naming is hard. Please don’t confuse this with JavaScript workers. As we’ll see, this is much simpler!

mapParams(): A generator that acts as a shared queue between those workers

mapAllSettled(): A top-level function that ties it all together: it initializes the results array, creates the queue, and waits for the workers to finish. It has the same signature as Promise.allSettled(arr.map(mapFn)).

You can find the code in this GitHub repo. It’s written in functional TypeScript to make it easier to understand what is going on.

I used Deno to run it, but with minimal to no modifications you should be able to run it on your favorite runtime, even the browser.

Example

The repo has a simple example using JSON Placeholder API. That API has a /todos endpoint with 200 entries.

The source array is initialized to contain numbers from 0 to 200.

The async map function picks each element, creates a URL and fetches the todo object. After that the title property of each TODO is returned.
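A map function along those lines might look roughly like the sketch below. The names `fetchTodoTitle`, `TODOS_URL` and `FetchLike` are hypothetical and not taken from the repo, and the injectable `fetchFn` parameter is an assumption added here so the function can be tried without network access:

```typescript
// Minimal subset of the fetch API this sketch relies on (hypothetical type).
type FetchLike = (url: string) => Promise<{
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}>;

const TODOS_URL = "https://jsonplaceholder.typicode.com/todos";

// Builds the URL for one todo, fetches it and returns only its title.
async function fetchTodoTitle(
  id: number,
  fetchFn: FetchLike = fetch, // assumption: a global fetch exists in the runtime
): Promise<string> {
  const response = await fetchFn(`${TODOS_URL}/${id}`);
  if (!response.ok) {
    throw new Error(`HTTP ${response.status} for todo ${id}`);
  }
  const todo = (await response.json()) as { title: string };
  return todo.title;
}

// When mapping, wrap the call so the array index is not passed as fetchFn:
// ids.map((id) => fetchTodoTitle(id))
```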

Here are the results for fetching 200 todo objects on my laptop over Wifi:

Using native Promise.allSettled() took 1032ms

Using our mapAllSettled() with 3 workers took 2794ms

As expected, the limited parallel version took more time, but the added benefits are:

At any given time, only 3 async map functions (the code above) were running

If the endpoint had some rate limiting in place, we would be much less likely to hit it

Below is a visualization of how 3 workers process elements of an array with 10 elements over time:

How does it work?

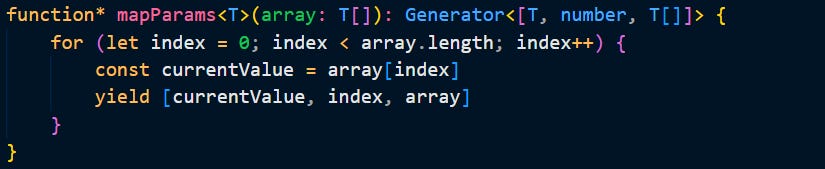

The trick is in the generator function that populates a queue shared between the workers:
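A minimal sketch of such a generator, assuming it yields the same three arguments that Array.prototype.map() passes to its callback (the `MapParams` type name is hypothetical, and the repo's version may differ in detail):

```typescript
// The three arguments Array.prototype.map() passes to its callback.
type MapParams<T> = [element: T, index: number, array: readonly T[]];

// Lazily yields one set of map arguments per array element.
function* mapParams<T>(array: readonly T[]): Generator<MapParams<T>, void, undefined> {
  for (let index = 0; index < array.length; index++) {
    yield [array[index], index, array];
  }
}

const queue = mapParams(["a", "b", "c"]);

// Every next() call resumes the generator until its next yield, so two
// consumers that share `queue` never receive the same element.
const first = queue.next().value; // element "a" at index 0
const second = queue.next().value; // element "b" at index 1
```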

As you can see, there’s no magic in the code. It just returns the arguments that should be passed to the map function according to JavaScript specification.

However, the way generators work is that yield pauses the execution until the consumer asks for the next value. In our case, that happens in one of the worker functions.

The workers all get the reference to the generator and iterate through it using a for..of loop as long as there is something:

The map function runs in a try..catch clause to ensure compatibility with the Promise.allSettled() signature:
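One way to sketch this helper is shown below. The exact parameter list is an assumption, and only the result shape (`status` plus `value` or `reason`) follows the documented Promise.allSettled() format:

```typescript
// Runs the async map function and converts the outcome into the same
// object shape that Promise.allSettled() produces. It never rejects.
async function runMapFn<T, R>(
  mapFn: (element: T, index: number, array: readonly T[]) => Promise<R>,
  element: T,
  index: number,
  array: readonly T[],
): Promise<PromiseSettledResult<R>> {
  try {
    return { status: "fulfilled", value: await mapFn(element, index, array) };
  } catch (reason) {
    return { status: "rejected", reason };
  }
}
```

Because the helper catches everything, a failure in one element cannot abort the loop that calls it.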

I left one tiny detail out:

The exported top-level map function creates an array to store the results.

A reference to this array is also passed to each worker.

The workers have access to the index of the source element they are processing, so after running the map function, they store the results in that array at the respective index.

The workers themselves are async functions and continue as long as there’s some work to do:
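A worker along these lines could be sketched as follows. The type names and the parameter order are assumptions, and the try..catch is inlined here only to keep the block self-contained:

```typescript
type MapParams<T> = [element: T, index: number, array: readonly T[]];
type MapFn<T, R> = (...params: MapParams<T>) => Promise<R>;

// Pulls work from the shared queue until it is exhausted.
// `queue` must be a single shared iterator (such as a generator object);
// a plain array would be iterated from the start by every worker.
async function worker<T, R>(
  queue: Iterable<MapParams<T>>,
  mapFn: MapFn<T, R>,
  results: PromiseSettledResult<R>[],
): Promise<void> {
  for (const params of queue) {
    const index = params[1];
    try {
      // While this worker awaits, other workers can pull the next item.
      results[index] = { status: "fulfilled", value: await mapFn(...params) };
    } catch (reason) {
      results[index] = { status: "rejected", reason };
    }
  }
}
```

Catching inside the loop matters: leaving a for..of loop through an exception would close the shared generator and stop the other workers as well.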

In the top-level function, all we have to do is await Promise.all(workers) to wait until all the workers are finished, and then return the results array:
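Putting the pieces together, a compact and self-contained sketch could look like the following. It is not the repo's code: passing `limit` as a third parameter, the input validation, and writing the worker as an inner closure are all assumptions made for brevity:

```typescript
type MapFn<T, R> = (element: T, index: number, array: readonly T[]) => Promise<R>;

// Shared queue: lazily yields the arguments for each mapFn call.
function* mapParams<T>(array: readonly T[]): Generator<[T, number, readonly T[]]> {
  for (let index = 0; index < array.length; index++) {
    yield [array[index], index, array];
  }
}

// Like Promise.allSettled(array.map(mapFn)), but with at most `limit`
// calls to mapFn in flight at any time.
async function mapAllSettled<T, R>(
  array: readonly T[],
  mapFn: MapFn<T, R>,
  limit: number = array.length, // assumption: no limit means full parallelism
): Promise<PromiseSettledResult<R>[]> {
  if (array.length === 0) return [];
  if (!Number.isInteger(limit) || limit < 1) {
    throw new RangeError("limit must be a positive integer");
  }

  const results: PromiseSettledResult<R>[] = new Array(array.length);
  const queue = mapParams(array);

  // Each worker keeps pulling from the shared queue until it is empty
  // and stores its result at the index of the source element.
  const worker = async (): Promise<void> => {
    for (const [element, index, source] of queue) {
      try {
        results[index] = { status: "fulfilled", value: await mapFn(element, index, source) };
      } catch (reason) {
        results[index] = { status: "rejected", reason };
      }
    }
  };

  const workers = Array.from({ length: Math.min(limit, array.length) }, () => worker());
  await Promise.all(workers);
  return results;
}
```

With this sketch, a call such as `await mapAllSettled(ids, mapFn, 3)` resolves to results in the same order and shape as `Promise.allSettled(ids.map(mapFn))`, while never running more than 3 map functions at once.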

The final code has a few more lines to handle edge cases like when an empty array is passed, or no limit is set. There’s also a bunch of console logs to make it easier to follow what’s going on.

You can modify the code to your heart’s content and use it as you wish (MIT license).

My monetization strategy is to give away most content for free. However, these posts take anywhere from a few hours to a few days to draft, edit, research, illustrate, and publish. I pull these hours from my private time, vacation days and weekends.

The simplest way to support me is to like, subscribe and share this post.

If you really want to support me, you can consider a paid subscription. As a token of appreciation, you get access to the Pro-Tips sections and my online book Reliability Engineering Mindset. You can get 20% off via this link.

You can also invite your friends to gain free access.

And to those of you who support me already, thank you for sponsoring this content for the others. 🙌 If you have questions or feedback, or you want me to dig deeper into something, please let me know in the comments.