Cargo culting

How value dominance, shallow understanding, and imitation hurt software, systems, and organizations

Cargo culting refers to a phenomenon where people imitate the superficial aspects of a practice or process without understanding the underlying logic or reasons behind it.

Although the term originated from historical events, its usage has expanded to other areas such as software, systems, and organizations.

Here’s the story of how cargo cults came to be, with some examples from the software and corporate worlds. In the Pro-Tips, we discuss actionable insights to prevent, spot, and dismantle cargo culting in your organization.

🤖🚫 Note: No AI is used to generate this content or images.

The historical phenomenon

During World War II, Japanese forces established bases on the remote islands of Melanesia.

The US and its allies later did the same because of the logistical and strategic importance of these islands.

To give you an idea of how remote these islands were, here’s Tanna, where multiple cargo cults emerged:

In a short period of time, the indigenous population was exposed to the largest war ever fought by technologically advanced nations.

Almost all equipment, supplies, clothing, medicine, and food were brought to the military bases as cargo.

The military personnel occasionally interacted with the indigenous peoples, who served as guides and hosts. This exposed the islanders to WHAT these cargos contained.

However, they were left completely in the dark about the WHY and HOW of these cargo drops, possibly due to language barriers, racism, or security concerns in the heat of the war.

By the way, “WHAT”, “HOW”, and “WHY” are in all caps because they’re a reference to Simon Sinek’s seminal TED talk.

After the war, the military bases were evacuated.

With that, the cargo stopped coming!

That didn’t stop indigenous peoples from engaging in rituals in the hope that the cargo would return. They believed the cargos were sent by their ancestors, and that the “white man” had captured them because he was better at rituals.

So they got to work and imitated what they observed!

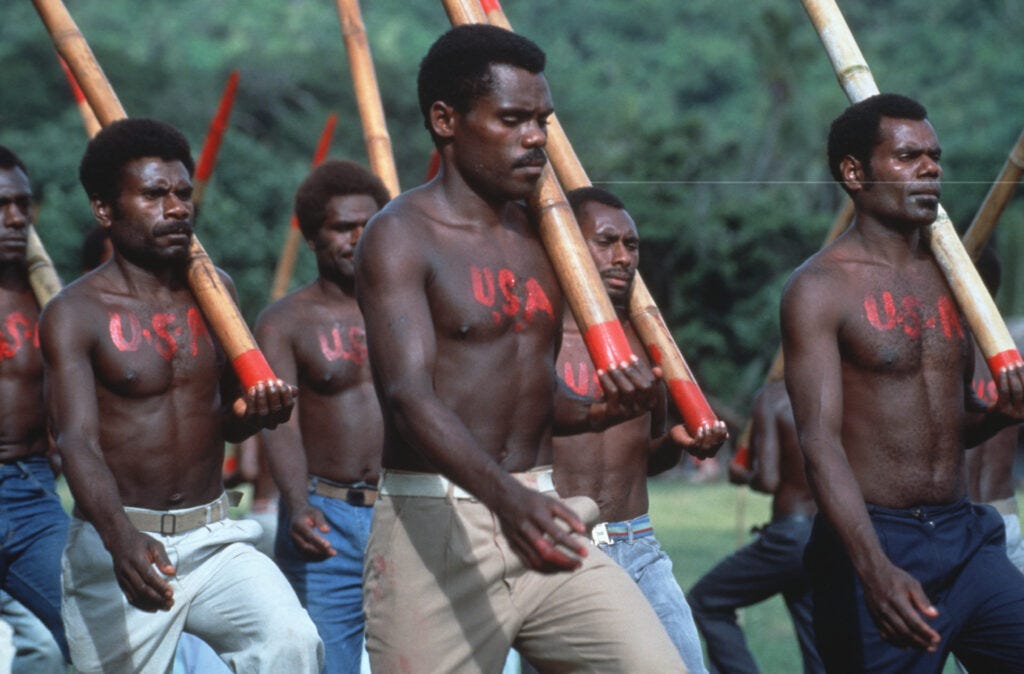

They marched…

…built airplanes…

…airport runways…

…complete with a control tower.

There’s a short documentary on YouTube if you prefer video.

Beneath the funny imitations (symptom), there is a sad story (root causes and contributing factors).

Many indigenous societies of Melanesia had a “big man” cultural element. Big men were individuals who gained prestige through gift exchanges. The more wealth a man could distribute, the more people were in his debt, and the greater his renown.

When these cultures were exposed to a seemingly unlimited supply of goods for exchange, they experienced "value dominance". That is, they were dominated by others in terms of their own (not the foreign) value system.

The language (and potentially security) barrier prevented their understanding of the truth.

Analysis

Cargo cults are very diverse but they all share these 3 elements:

Value dominance: judging others by our own value system. e.g., if someone from Google preaches something, it carries more weight because we all know how hard it is to get a job at Google, and most people dream of solving Google-level problems and earning a Google-level salary/profit. This is the halo effect: a bias where one’s association (company, country, race, etc.) gives them an unfair advantage.

Shallow understanding: critical thinking is replaced by superstition and magical thinking. e.g., “best practices” are often someone’s interpretation of why something worked at a certain time and in a certain environment. This interpretation is often exaggerated to make a point. Naïve folks extrapolate these best practices to their own time and environment and hope to get the same result. The Spotify model is one example, but this phenomenon also happens with more “logical” ideas like design principles and practices that sometimes conflict with each other (DRY, WET, KISS, TDD, …).

Imitation: going through superficial motions, processes, and rituals. e.g., SAFe is an elaborate process with many roles and rituals. It promises to make large software teams “agile”, but in practice it’s yet another waterfall process packaged for profit.

There are many factors that accelerate the effect of each element:

Value dominance: is boosted by name-dropping, slick presentation, and deliberate DHV (demonstration of high value).

Shallow understanding: is boosted by charismatic leaders, lazy practitioners, and a culture that has blocked feedback loops.

Imitation: is boosted by creating false hope, lack of education, and collectivism overriding individual identity.

Put together, you get VSI:

Examples

Cargo culting can happen in various contexts, such as technology, business, or even education, where people adopt practices without fully comprehending their purpose and rationale.

As a reminder, these are the elements of cargo culting:

Value dominance

Shallow understanding

Imitation and rituals

Try to see if you can identify these elements in the examples below. 🙂

Cargo cult programming

This was my introduction to the term cargo culting, so it has to go first.

Cargo cult programming is a style of computer programming characterized by the ritual inclusion of code or program structures that serve no real purpose. —Wikipedia
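To make "ritual inclusion of code" concrete, here is a minimal sketch. The function names and the specific rituals are hypothetical, invented for illustration rather than taken from any real codebase. Both functions return the same result; the first simply carries lines that were copied from elsewhere without anyone asking WHY they were there.

```python
import gc
import time


def total_price_ritual(prices):
    """Sums prices, wrapped in rituals copied from other code."""
    prices = list(prices)[:]      # copies a list that is never mutated
    total = 0
    try:
        for price in prices:
            total = total + price
    except Exception:
        raise                     # catches only to re-raise: no effect
    time.sleep(0)                 # "it fixed a race condition once"
    gc.collect()                  # nothing here needs a forced collection
    return total


def total_price(prices):
    """Same result without the rituals."""
    return sum(prices)
```

Deleting each ritual line one at a time and re-running the tests is a cheap way to find out whether it ever served a purpose in this context.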

Typical examples include:

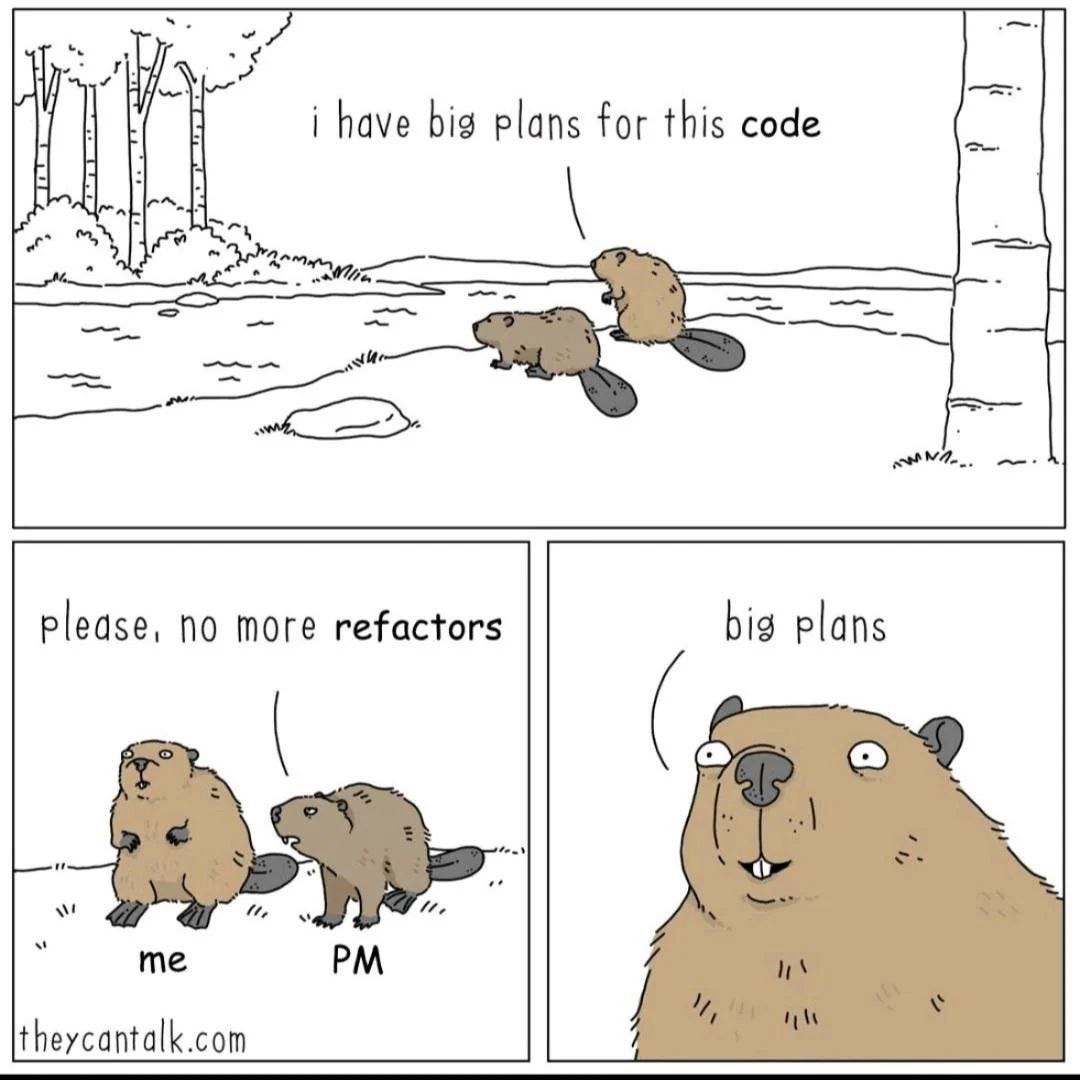

Someone returned pumped from an inspiring conference about functional programming. They rewrote major parts of their codebase to gain hands-on experience at the company’s expense. In the process, they introduced new bugs while making the codebase harder to maintain for others who didn’t go to the same conference or weren’t on board with the idea.

Inspired by an awesome YouTube talk and some hobby project experimentation, the team lead is convinced that Rust is objectively a better programming language than Java/C#. So they convince the PM to give them 3 months to rewrite a “legacy” system in Rust.

The team spends 6 months trying to rewrite everything in Rust, only to revert the decision because of the steep learning curve, poor ecosystem, and the tool “not being there yet” for their use case. This is a case of a tech bet where the investment wasn’t justified from the start.

After attending a local meetup, a few team members were totally sold on the idea that all their tech debt would be paid off if they implemented SOLID design principles in their code.

They got to work and eventually spent more time on the SOLID rewrite than on actually fixing the original tech debt. The added value of SOLID was minuscule in their CRUD-heavy application. New bugs replaced the old ones.

The original masterminds left the company, and the hiring bar was now higher for new recruits. Abusing design patterns and principles when they’re not justified is not limited to SOLID. I’ve seen abuse of other concepts like KISS (keep it simple stupid), DRY (don’t repeat yourself), WET (write everything twice), MVC (model view controller), the Unix philosophy (do one thing and do it well), etc. These are not objectively bad ideas but can be abused to feed premature optimization.

Frustrated with Amazon EC2, the DevOpsy people in the team convinced the non-technical leadership to migrate everything to Kubernetes (circa 2018). Things went as you’d imagine:

A software-supported company wanted to up its software game to cope with competition. Guided by McKinsey, they decided to throw money at the problem and hire from Big Tech.

The company opened an office in London and started gobbling up talent from Facebook, Google, and Amazon. The ex-Big-Tech employees started cargo culting rituals from their ex-employers: tech committees, platform unification, tech radar, tech standards, etc. These rapidly burned the budget without bringing the company closer to any of its objectives.

Not only were the employees and the office expensive, but these things also take time and require cultural transformation. And some of these initiatives simply didn’t work because Big Tech has a fundamentally different competitive landscape, revenue model, influence, talent bar, and investment model.

In this particular case, the company split in two after the unsuccessful investment, and the ex-Big-Tech employees quit one after another.

The London office was shut down.

Recap

Cargo cults emerge when we overestimate the value of ideas by our own measures. We need to ask about the trade-offs. When hiring from Big Tech, be mindful about who you’re hiring. Many of these folks didn’t initiate the great work but were merely bystanders or practitioners. Be careful about the name-dropping effect and authority bias.

Understanding WHAT (they do) is important but don’t stop there. Dig deeper to understand HOW (it works) and WHY (they do that).

As a social species, we’re vulnerable to imitation and rituals. Monkey see, monkey do. But what makes us different from monkeys is critical thinking. As it turns out, individual differences are quite good at flagging pointless exercises. The problem is when leadership overrides these hints and boosts fake alignment.

Many people accept cargo cults as an inevitable part of tech work. If that were true, I wouldn’t have written this post! My goal is not to rant and document the status quo!

Pro-tips


In the pro-tips, we discuss actionable advice to avoid, identify, and dismantle cargo cults in your organization.