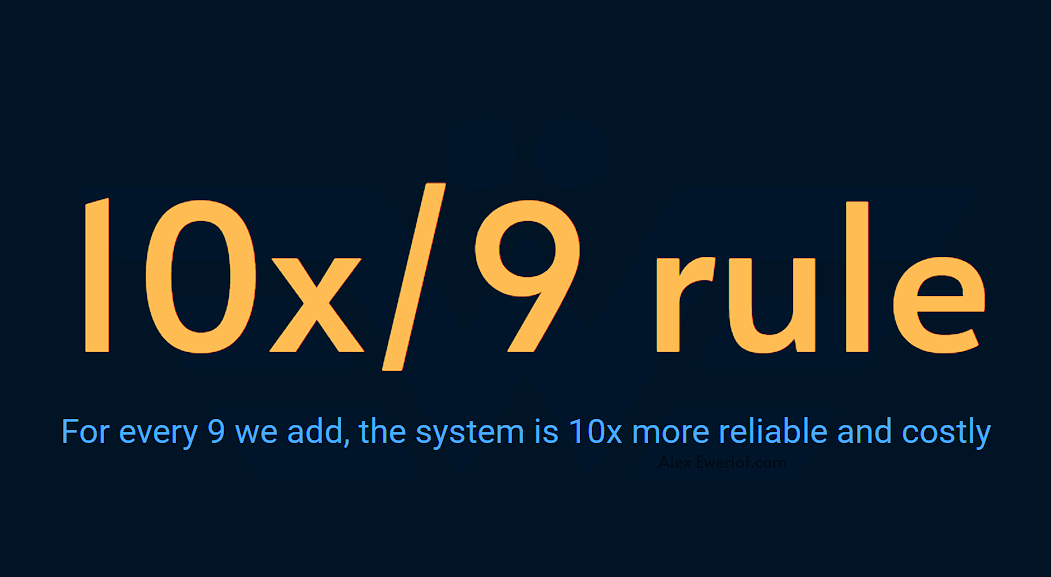

10x/9 Rule


When setting SLOs (service level objectives), there’s a rule of thumb that goes like this:

For every 9 you add to the SLO, you’re making the system 10x more reliable but also 10x more expensive.

I call it the 10x/9 rule (read “ten exes per nine”). The first time I heard it, I was suspicious, but after looking at the math and reflecting on my experience, I found that it holds up surprisingly well.
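Written as formulas, the rule looks roughly like this (a sketch of a rule of thumb, not a law). With n nines and a measurement period T (for example, a month), the SLO, the allowed downtime D, and the approximate cost C are given below, where C_2 is a hypothetical baseline cost of running the service at 2-nines:

$$
\mathrm{SLO}(n) = 1 - 10^{-n}, \qquad
D(n) = T \cdot \bigl(1 - \mathrm{SLO}(n)\bigr) = T \cdot 10^{-n}, \qquad
C(n) \approx C_2 \cdot 10^{\,n-2}
$$

The first two expressions are exact; only the cost line is the rule of thumb. Going from 2-nines to 5-nines therefore divides the allowed downtime by 10^3 = 1000 and, if the rule holds, multiplies the cost by roughly the same factor.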

Every 9 you add

Let’s first unpack the “9” part before moving on to the “10x”.

It is common for an SLO to be composed only of 9s, for example 99% or 99.9%. It doesn’t have to be that way (99.5% is a perfectly valid SLO, and so is 93%!), but it’s common.

When the SLO consists only of 9s, it can be abbreviated like this:

“2-nines” is another way to say 99%. Error budget = 1%

“3-nines” is 99.9%. Error budget = 0.1%

“4-nines” is 99.99%. Error budget = 0.01%

“5-nines” is 99.999%. Error budget = 0.001% (realm of highly available systems)

…
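The mapping from “n-nines” to an SLO and its error budget is simple arithmetic. Here is a small sketch of it, assuming we only deal with SLOs made entirely of 9s. The helper names (slo_for_nines, error_budget, as_percent) are hypothetical; Python’s decimal module is used so that values like 0.999 stay exact instead of picking up floating-point noise.

```python
from decimal import Decimal


def slo_for_nines(nines: int) -> Decimal:
    """Return the SLO (as a fraction) made of `nines` nines, e.g. 3 -> 0.999."""
    if nines < 1:
        raise ValueError("nines must be >= 1")
    return 1 - Decimal(10) ** -nines


def error_budget(slo: Decimal) -> Decimal:
    """The error budget is whatever the SLO does not promise: 1 - SLO."""
    return 1 - slo


def as_percent(fraction: Decimal) -> str:
    # normalize() drops trailing zeros; the "f" format avoids scientific notation
    return f"{(fraction * 100).normalize():f}%"


for n in range(2, 6):
    slo = slo_for_nines(n)
    print(f"{n}-nines: SLO = {as_percent(slo)}, error budget = {as_percent(error_budget(slo))}")
```

Each extra nine shrinks the error budget by a factor of 10, which is exactly the pattern in the list above.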

I haven’t worked with any system that has more than 5-nines, but theoretically it’s possible; it’s just too expensive.

Which brings us to the 10x part.

10x more reliable

So, is the system really 10x more reliable?

Let’s start simple. Suppose we have a time-based availability SLI (also known as uptime).

This is such a common SLI that there are many sites dedicated to it. Let’s use one of the simpler ones: uptime.is. You can just punch in a number and see the error budget for different periods.

99% allows for 7h 14m 41s of downtime per month (26,081 seconds)

99.9% allows for 43m 28s of downtime per month (2,608 seconds)

99.99% allows for 4m 21s of downtime per month (261 seconds)

99.999% allows for 26s of downtime per month (26 seconds)

99.9999% allows for 2.6s of downtime per month (2.6 seconds)

…

You get the idea. Every nine we add to the SLO allows 10x less downtime (i.e., 10x more reliability).
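The downtime figures are just the error budget multiplied by the length of the period. Here is a minimal sketch that reproduces the pattern, assuming a 30-day month (2,592,000 seconds). The uptime.is figures quoted above correspond to a slightly longer month (43m 28s for 99.9%, whereas a 30-day month gives 43m 12s), so the absolute numbers differ a little, but the 10x step per nine is the same. allowed_downtime is a hypothetical helper name.

```python
from decimal import Decimal

# Assumption: a 30-day month (2,592,000 s). The uptime.is figures quoted above
# correspond to a slightly longer month, so they come out a little higher.
SECONDS_PER_30_DAY_MONTH = Decimal(30 * 24 * 60 * 60)


def allowed_downtime(slo_percent: str, period_seconds: Decimal = SECONDS_PER_30_DAY_MONTH) -> Decimal:
    """Seconds of downtime a time-based availability SLO tolerates per period."""
    error_budget = 1 - Decimal(slo_percent) / 100  # e.g. "99.9" -> 0.001
    return error_budget * period_seconds


slos = ["99", "99.9", "99.99", "99.999", "99.9999"]
downtimes = [allowed_downtime(slo) for slo in slos]

for slo, downtime in zip(slos, downtimes):
    # normalize() drops trailing zeros; the "f" format avoids scientific notation
    print(f"{slo}% allows {downtime.normalize():f} s of downtime per 30-day month")

# Each extra nine divides the allowed downtime by exactly 10.
ratios = [looser / stricter for looser, stricter in zip(downtimes, downtimes[1:])]
print(all(ratio == 10 for ratio in ratios))  # True
```

Passing a different period_seconds (a week, a quarter) gives the other periods that calculators like uptime.is show.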

Cost of reliability

What does it take to make the system more reliable? Sometimes it’s just a small tweak, but often it comes at a great cost. For example:

Refactoring: The code may need to be refactored to pay back tech debt or to use a more reliable algorithm. Sometimes you hit the limit of what’s possible with a programming language, and you may consider rewriting the critical parts of the service in another language. That doesn’t happen overnight: the planning, learning, refactoring, and migration take time and incur real development cost.

Reorg: If you’re not organized for reliability, there’s a cap on your reliability. Handovers increase the risk of miscommunication and broken ownership. Kebab vs Cake digs deeper into the organizational aspects.

Re-architecture: Sometimes the system needs to be completely redesigned to handle the new NFRs (non-functional requirements) like scalability, reliability, and security. You may need to add fallbacks or failover, among other things.

Increase bandwidth: If the team is supposed to deliver features at the same pace while also improving reliability, it needs more bandwidth. For example: more people, more skilled people, fewer meetings, and more efficient tools like AI.

Cadence: Change is the number one enemy of reliability! Every time you change the system, you give it a chance to break. To increase reliability, you may have to reduce the pace of shipping features, which in turn can hurt the company’s bottom line.

Education: To raise awareness about the importance of reliability, you may need to invest in educating your workforce (this is what I do, by the way). Education is not limited to the leaf nodes of the organization. If you want real change, you need to get leadership on the same page as the developers, so they speak the same language and prioritize reliability as a feature in the backlog.

Tooling: From AI-assisted tools for coding and testing to observability, incident management, and on-call, there are tons of tools that can help build more reliable systems, but they come at a cost: to purchase, learn, configure, integrate, govern, etc.

Migration: You may have to migrate your workload to more capable systems or to other regions and deal with the complexity of replication, a larger attack surface, access control, etc.

Infra: The infrastructure may need more capacity and redundancy: beefier machines, more instances, more database replicas, etc. Both vertical scaling (e.g. more capable resources) and horizontal scaling (e.g. redundancy, CDN) cost money.

Change of vendors: “You get what you pay for.” A 3rd-party vendor or dependency may not be able to meet your reliability requirements, even at a higher price point. In these cases, you may switch vendors or even build an in-house solution.

TTD: Time To Detect (from when symptoms hit the consumer until someone in the right team knows about it). Reducing it may require automatic incident detection.

TTR: Time To Resolve (from when symptoms hit the consumer until they’re resolved). Reducing the resolution time may require investing in a more efficient release and rollback process and tooling.

On-call: In many countries, on-call requires extra pay. In Sweden, for example, you get paid ~40% more while you’re on call, whether or not an incident happens; the pay is even higher on weekends and on the days before “off” days, and you also get an extra paid day off. An on-call rotation typically requires 5-8 people who know the system well enough to troubleshoot incidents, and you have to pay all of them.

Automation: Beyond a certain level, you cannot afford to have humans in the loop. For example, 5-nines allows only 26 seconds of downtime in a month! In 26 seconds, you would need to identify the problem, page someone in the middle of the night, wake them up, have them question their career choices, open the laptop, find the root cause, create a fix, and ship it to production. Not possible! This is the realm of high-availability systems, where you need automatic error detection and automatic error recovery.

Process: There’s always a good reason for a process, and it’s never about enabling human ingenuity but rather about protecting against human error, bias, slowness, etc. Process can add consistency and reliability at the cost of friction: QA (quality assurance) acting as gatekeepers, the platform team acting as babysitters, management acting as firefighters. Before you know it, this extra friction may lead to your most skilled people walking out the door. Add process deliberately, where the cost of friction is justified by the risks it mitigates. Keep it light and revisit it regularly to keep productivity at an optimal level.
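The Automation point boils down to arithmetic: how many incidents of a given length fit in a month’s error budget? The sketch below assumes a 30-day month and treats each incident as a full outage lasting its TTR (which, per the definition above, runs from when symptoms hit until they’re resolved, so it already includes detection). The two TTR values and the helper names are hypothetical, chosen only for illustration; they are not benchmarks.

```python
from decimal import Decimal

# Assumption: a 30-day month (2,592,000 s).
SECONDS_PER_30_DAY_MONTH = Decimal(30 * 24 * 60 * 60)


def monthly_budget_seconds(slo_percent: str) -> Decimal:
    """Error budget of a time-based availability SLO, in seconds per 30-day month."""
    return (1 - Decimal(slo_percent) / 100) * SECONDS_PER_30_DAY_MONTH


def incidents_per_month(slo_percent: str, ttr_seconds: int) -> int:
    """How many full-outage incidents, each lasting `ttr_seconds`,
    fit in the monthly error budget before the SLO is breached."""
    # Integer division: only whole incidents count.
    return int(monthly_budget_seconds(slo_percent) // ttr_seconds)


# Hypothetical TTRs: a human paged at night vs. automatic detection and failover.
HUMAN_TTR = 30 * 60   # 30 minutes (illustrative only)
AUTOMATED_TTR = 5     # 5 seconds (illustrative only)

for slo in ["99", "99.9", "99.99", "99.999"]:
    print(
        f"{slo}%: {incidents_per_month(slo, HUMAN_TTR)} human-handled or "
        f"{incidents_per_month(slo, AUTOMATED_TTR)} auto-recovered incidents per month"
    )
```

With these made-up inputs, a 30-minute human-handled incident fits once a month at 99.9% and not at all at 99.99% or 5-nines, while 5-second automatic recoveries still leave room for a handful of incidents even at 5-nines.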

Does adding a 9 really make it 10x more expensive? Unlike downtime, the math for cost is not as straightforward and depends on the type of product, technology, architecture, personnel and many other factors.

But one thing is clear: higher reliability is expensive. The cost needs to be weighed against the business objectives and justified by the margin you’re making from running a service.

As Alex Hidalgo states in his book Implementing Service Level Objectives:

Not only is being perfect all the time impossible, but trying to be so becomes incredibly expensive very quickly. The resources needed to edge ever closer to 100% reliability grow with a curve that is steeper than linear. Since achieving 100% perfection is impossible, you can spend infinite resources and never get there.

In practice, however, there are some hurdles. The biggest one is that senior leaders often think they should be driving their teams toward perfection (100% customer satisfaction, zero downtime, and so forth). In my experience, this is the biggest mental hill to get senior management over.

I use this meme when motivating teams to measure the right thing and commit to a reasonable objective:

It’s a joke for you, but for me it’s a memory 😄

Now it’s time to tell that story.

Story

A few years ago, I joined the direct-to-consumer division of a media company. The product was similar to Netflix, but the content was owned by the American media giant itself. Before I joined, the product had severe reliability issues. Going through the app reviews and user feedback, one theme was dominant: the users loved the content but hated the digital platform.

They had a reliability issue.

So, they did what any good American enterprise at their scale would do: throw money at the problem 💸💸💸

The solution? Hire SREs. That’s how I came in.

When I heard that the CTO wanted 5-nines, I giggled (reminder: the service could only be down for 26 seconds a month, and we’re talking about a Netflix-type product, not an airport control tower, a bank, or a hospital information system). 😄

And our entire engineering org was a fraction of the size of Netflix’s. Netflix had also been in the game for a long time and had been actively working on reliability.

Then it occurred to me: we had a hard uphill battle ahead. How could we lower his expectations?

How we did that is in the “Pro tips”. These posts take anywhere from a few hours to days to write. The main chunk of the article is available for free. 🔓 For those who spare a few bucks, the “Pro tips” are unlocked as a token of appreciation. And for those who choose not to subscribe, that is fine too. I appreciate your time reading this far, and hopefully you’ll share it within your circles to inspire others. 🙏 You can also follow me on LinkedIn, where I share useful tips about technical leadership and growth mindset.