2 years ago, I wrote about the flaws of technical committees and why they are destined to fail.

But what tool do I use instead?

Short answer: ephemeral task force.

An Ephemeral Task Force (ETF) is a selected group of people with cross-functional knowledge, mandate, and responsibility who are assembled for a specific delivery with a clear end in mind. The group is dismantled after the objective is accomplished.

This article covers:

Purpose of ephemeral taskforce and when it’s the right tool for technical leadership.

Comparison to technical committee.

Examples of how I use it to deliver impact across a large organization.

Pro tips: common pitfalls and remedies (the only paywalled section)

🤖🚫 Note: NO generative AI was used to create this content. This page is only intended for human consumption and is NOT allowed to be used for machine training including but not limited to LLMs. (why?)

Purpose of ephemeral taskforce

Ephemeral taskforce is a tool in the senior technical leadership toolbox.

It is suitable for initiatives that span the org and require more bandwidth than a single person can deliver, hence the need to collaborate.

If one person would do, an ETF is overkill. So is a technical committee.

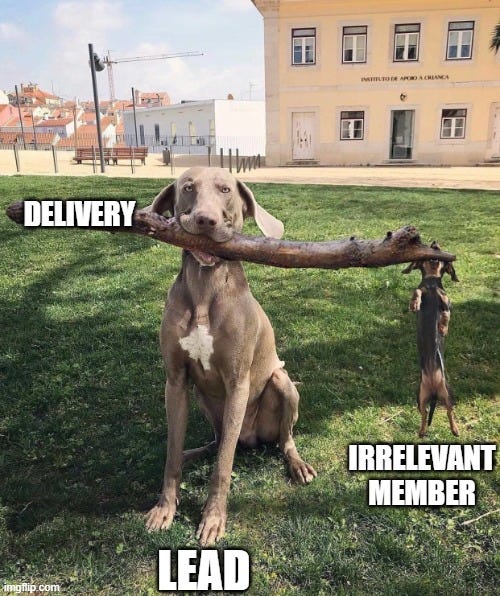

Unlike a typical leader/follower setup (e.g. one Staff Engineer leading a migration across multiple teams), ETF involves multiple leaders, each owning a piece of the execution. In this context, ownership refers to knowledge, mandate, and responsibility.

ETF is a temporary assembly of technical leaders and engineers. For example:

Implementing new regulations like the European Accessibility Act (EAA) or addressing GDPR compliance concerns across the product landscape

Implementing a new business model that requires significant mutation of existing architecture

These cases often require a higher execution bandwidth and broader knowledge than one person can deliver in an acceptable time.

For example, it isn’t enough to have a solid understanding of GDPR. It is also important to know how user data is stored in the database, how it is transferred between services, how payments are processed, how logs are stored, how the UI is tracked, etc.

That’s too much knowledge.

The same is true of mandate and responsibility: the required ownership is not consolidated in one person but spread across a group.

That’s why a selected group of individuals is assembled into a virtual team for a concrete mission.

If the initiative falls within the ownership boundaries of one team in the current org chart, there’s no reason to assemble a taskforce.

Similarly, if the nature of the delivery calls for an ongoing engagement without an end-date, then reorganization is a better tool than ETF. A thoughtful reorg can facilitate the initial delivery and continuous evolution.

To recap, ETF is composed of multiple people with the required ownership to deliver an impact in a set time frame.

Ideally the ephemeral taskforce is composed of:

A TPM (technical program manager) and/or a Staff or Principal who owns the high level impact

Individual contributors, SMEs (subject matter experts), and product managers across the org who:

Have the required mandate to make changes to systems that are owned by their home team

Possess the know-how and technical hard skills required to execute the tasks as the discovery process uncovers new aspects to the mission. Here, a can-do and positive attitude trumps having ninja-level skills in the most obvious technical aspect of the mission.

Relate to the problem being solved (maybe it’s a problem they have in their team) and feel the responsibility and motivation to solve that problem for their team and others

Typically a written strategy document can build clarity and alignment across this diverse group.

Comparison to technical committee

Let’s quickly go through the major flaws with technical committees (TC) and examine how ephemeral taskforce (ETF) addresses them:

Knowledge

❌ Technical committees tend to recycle the same brains for all sorts of decisions and initiatives.

✅ ETF brings the best people with the right skillsets and subject matter expertise to execute.

Mandate

❌ TC is typically a symptom of poor engineering management causing engineers to take matters into their own hands. TC acts as a self-appointed parliament. Formally, management has the mandate, but due to a lack of engineering literacy, they delegate to whoever runs the TC (we have covered this anti-pattern previously).

✅ Ephemeral taskforces are typically composed of people selected by the engineering leadership, with a clear mandate to execute a specific mission. The clear mission often justifies collaboration, so the formal mandate is less likely to be [ab]used.

Responsibility

❌ Technical committees typically turn into echo chambers with “us vs them” narratives. The lack of delivery and meaningful impact is often blamed on “others” who aren’t in the room.

✅ Ephemeral taskforces have the owners of the problem and solution with clear expectations and responsibility in the room. There are no others because everyone who is connected to the mission is either part of ETF or directly connected to it.

Productivity

❌ Technical committees tend to be larger and be composed of higher ranking engineers who meet weekly or biweekly indefinitely. This makes them too expensive for what they deliver because the delivery is often limited to manifestos and playbooks that aren’t connected to the reality of the engineers’ day-to-day work.

✅ Ephemeral taskforce is created out of necessity to execute at scale with a clear delivery in mind. ETF is often much smaller than TC due to razor sharp focus. The group dismantles after the objective is reached.

Story

A few years ago, I joined a company that suffered from poor delivery speed and unreliable technical solutions.

Each release was treated like a novel event worthy of rituals, manual testing, heavy process, gate keeping, and strict governance.

Despite that, the incidents were rampant, took too long to spot and resolve, and had an unreasonably high impact on business metrics.

This was a few years after DORA metrics were a hot topic. The leadership asked if I could help the org measure DORA metrics. Because it was considered “best practice”!

DORA stands for DevOps Research and Assessment. At the time, its focus was to measure and improve these metrics:

Deployment Frequency (DF): how often a team deploys code to prod.

Lead Time for Changes (LTC): how long it takes from commit to prod.

Change Failure Rate (CFR): ratio of deployments that break prod.

Mean Time to Recovery (MTTR): average time prod is broken.
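To make the four definitions concrete, here is a minimal sketch that computes them from a list of deployment records. The `Deployment` record shape, its field names, and the sample data are assumptions made up for illustration. They are not the format of any particular CI/CD tool, and MTTR is approximated here as the time from a failed deployment until prod is restored.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached prod
    failed: bool = False                    # did this deployment break prod?
    restored_at: Optional[datetime] = None  # when prod was healthy again


def dora_metrics(deployments: list[Deployment], period_days: int) -> dict:
    """Compute DF, LTC, CFR, and MTTR over a window of period_days."""
    if not deployments:
        raise ValueError("need at least one deployment")
    total = len(deployments)
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    # Assumption: recovery time runs from the failed deploy to restoration.
    recoveries = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployment_frequency_per_day": total / period_days,
        "lead_time_for_changes": sum(lead_times, timedelta()) / total,
        "change_failure_rate": len(failures) / total,
        "mean_time_to_recovery": (
            sum(recoveries, timedelta()) / len(recoveries) if recoveries else None
        ),
    }


# Made-up sample: four deployments in one week, one of which broke prod.
t0 = datetime(2024, 1, 1, 9, 0)
sample = [
    Deployment(t0, t0 + timedelta(hours=2)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=4)),
    Deployment(
        t0 + timedelta(days=2),
        t0 + timedelta(days=2, hours=6),
        failed=True,
        restored_at=t0 + timedelta(days=2, hours=7),
    ),
    Deployment(t0 + timedelta(days=3), t0 + timedelta(days=3, hours=8)),
]
metrics = dora_metrics(sample, period_days=7)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

In a real setup the hard part is not this arithmetic but getting trustworthy records out of each pipeline and SCM tool, which is exactly where the effort went in the story below.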

Fun fact! DORA also stands for the new EU regulation called Digital Operational Resilience Act! 😄 That one is concerned with digital resilience of financial entities to withstand, respond to, and recover from disruptions.

It is debatable whether DORA was the right tool, but I was too new to the org to push back with a reasonable alternative. I saw it as a learning opportunity to both get to know the org better and learn from implementing DORA at scale.

Soon after my initial self-onboarding, I realized that the task was much larger than I could deliver on my own in a timely manner.

It involved:

Identifying all the moving parts. Not a single person knew the entire tech landscape. Sure, some of the big parts were known, but nobody knew how many services we had or where they ran. This is the type of problem that an IDP (internal developer portal) like Backstage or Compass solves, but we had nothing! So I had to start by crowdsourcing that data in a Notion Database!

Setting up a destination for the data, ideally with a graphing feature. Observability tools are usually an excellent choice for this type of time-series data. Unfortunately, the observability tooling landscape was fragmented and many services had no observability at all!

Tweaking CI/CD pipelines and SCM (source code management) tools like GitHub, Bitbucket, Azure DevOps (yeah we had all of them!) to get the f**ing DORA metrics.

Introducing the org to DORA metrics and their purpose, tuned to WIIFM (what’s in it for me), while simultaneously talking to leadership to control their urges to weaponize these metrics! I used talks, write-ups, a dedicated Slack channel, and even video recordings to spread that knowledge, but to this day I think there are some corners of the org that I failed to penetrate.

It was a big task and if I had worked on it alone, by the time I was done, the whole thing would have been rewritten in Gemxy (a hypothetical programming language from 2052)!

Fortunately, I had a good relationship with the director of platform engineering. He dedicated a few SREs to this task and I found a team who volunteered to be the guinea pig. We also outsourced part of the problem to Sleuth to extract metrics from various data sources like GitHub and Azure DevOps.

After the initial delivery, the Platform Engineering org took ownership of the DORA metric as one of the services they offer to the rest of the org going forward.

I moved on to something more interesting: reliability metrics. You see, since 2021, DORA has a fifth metric that doesn’t get as much attention as DF, LTC, CFR, and MTTR.

It’s our friend SLI!

If you’ve been following my work, you know that I’ve covered everything from SLI, SLO, and SLA to terms that I coined like SLS! Hell, my book is on that topic! This is how it all started!

But before starting with SLI, I had to fix one problem: software catalog!

Remember that crowdsourced Notion Database? Yeah, turns out crowdsourcing is error prone because:

The data should be manually updated as the shape of the architecture changes and new services emerge while others are sunset.

The data was incomplete because I had to literally go to every corner of the organization, introduce the need, interrogate them, document what I learned, and ask them to complete it. That is, if they even felt comfortable with the transparency that this kind of initiative demanded.

The Notion Database was collapsing under this level of load! I had a couple of sessions with their product managers and even met one of their engineers who was working on improving Notion Database performance. But they considered this way of using it as an edge case and wouldn’t optimize for it.

We had to move that data out of Notion.

We needed something better. I needed to systematically go through our components and move each team through a Kanban swim-lane from introduction to SLI/SLO, to measuring them using observability tooling, to tying their commitment (SLO) to alerts and on call.

It was around that time that some of my colleagues introduced Backstage to the mix. Backstage is an open source IDP (internal developer portal) and one of its key features is the software catalog.

I started fetching the data programmatically from the Notion API to generate software-catalog.yaml files and push them as PRs to GitHub repos. Backstage would in turn take those files and show them in a clean directory.

While I was coding the above, a developer showed up out of nowhere and offered to help. Turns out he saw what I was up to in my open TODO tasklist (I know it’s been almost 2 years since I said I’d write about it, but I haven’t published it yet 😄).

Together, we migrated the entire system catalog database from Notion to Backstage. He was a competent coder. After the initial onboarding and giving him access to Notion and Backstage, my involvement shrank to writing the specifications for how our custom fields should be mapped to Backstage labels, tags, and annotations.
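For a feel of what such a mapping specification boils down to, here is a minimal sketch that turns one catalog row into a Backstage Component descriptor. Everything about the input is hypothetical: the row keys (`Name`, `Team`, `Tier`, `Tech`, `page_id`), the `example.com/...` label and annotation keys, and the fixed `type` and `lifecycle` values are placeholders, not our actual custom fields. Fetching from the Notion API and opening PRs are left out, and the tiny YAML writer only handles the nested dicts, lists, and scalars used here.

```python
import json
import re


def slugify(value: str) -> str:
    """Lowercase and collapse runs of non-alphanumerics into single dashes."""
    return re.sub(r"[^a-z0-9]+", "-", value.lower()).strip("-")


def to_entity(row: dict) -> dict:
    """Map one hypothetical catalog row to a Backstage Component entity."""
    return {
        "apiVersion": "backstage.io/v1alpha1",
        "kind": "Component",
        "metadata": {
            "name": slugify(row["Name"]),
            "description": row.get("Description", ""),
            # Custom single-value fields become labels (hypothetical key).
            "labels": {"example.com/tier": slugify(row["Tier"])},
            # Multi-select fields become tags.
            "tags": [slugify(t) for t in row.get("Tech", [])],
            # References back to the source record become annotations.
            "annotations": {"example.com/notion-page-id": row["page_id"]},
        },
        "spec": {
            "type": "service",          # placeholder value
            "lifecycle": "production",  # placeholder value
            "owner": slugify(row["Team"]),
        },
    }


def to_yaml(node: dict, indent: int = 0) -> str:
    """Render nested dicts and lists of scalars as block-style YAML.

    Scalars are written with json.dumps, since a JSON string is also a
    valid double-quoted YAML scalar. Empty collections become {} or [].
    """
    pad = "  " * indent
    lines = []
    for key, value in node.items():
        if isinstance(value, dict) and value:
            lines.append(f"{pad}{key}:")
            lines.append(to_yaml(value, indent + 1))
        elif isinstance(value, list) and value:
            lines.append(f"{pad}{key}:")
            lines.extend(f"{pad}  - {json.dumps(item)}" for item in value)
        else:
            lines.append(f"{pad}{key}: {json.dumps(value)}")
    return "\n".join(lines)


# Made-up row standing in for one record of the crowdsourced database.
row = {
    "page_id": "hypothetical-page-id",
    "Name": "Checkout API",
    "Description": "Handles checkout",
    "Team": "Payments",
    "Tier": "Gold",
    "Tech": ["Node.js", "PostgreSQL"],
}
entity = to_entity(row)
print(to_yaml(entity))
```

Keeping the mapping in one small function like `to_entity` means the specification and the code stay in one place, so a change to a custom field is a one-line edit followed by regenerating the files.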

Our ETF was 2 people, and we migrated 400 components and systems. He also created a Slack bot so we could use automation to harass people at scale to double check the automatically generated software catalog entries.

The rest of my time went to massaging org leaders into adopting service levels while running SLO workshops team after team.

Another person showed interest in running those SLO workshops. Initially he took the back seat on a couple of workshops. Then we switched seats, and he ran the workshop while I was observing. After a while he ran workshops on his own.

Although the ETF was 3 people, the other two rarely interacted because they didn’t need to. One was coding the catalog while the other ran the workshops. I was involved in both.

Currently I’m running a larger ETF to roll out a unified observability stack across the company while establishing a unified on-call response process and tooling. I’m taking notes and will for sure publish the learnings.

Let’s shift gears and look into some of the common pitfalls of ephemeral taskforces and how to tackle them.

Pro tips: common pitfalls and mitigations

We’ll discuss the following pitfalls:

Picking the wrong people

Unclear delivery, scope creep, or “gold plating”

“Home team” sabotaging the ETF priorities

Disbanding the ETF too late

more
