4 years after ChatGPT kickstarted the biggest change in knowledge work, it scares me to see knowledge workers who haven't spent the time and energy to skill up.

The massive investments in AI fueled a lot of excitement but also lots of noise. Every day there’s a new tool (sometimes multiple) boosted by AI influencers through the roof.

As someone who doesn’t underestimate AI, I want to know what’s relevant and how I should plan my career as a knowledge worker.

What if there was an objective AI fluency skill level? Think about the utility of such leveling:

For the Self-Learner: it guides an intentional growth trajectory. Moving from Level 2 to 3 requires learning RAG and model ROI, while moving from 4 to 5 requires shifting to deterministic system architecture.

For the Organizational Leaders (CTO/VP/Director) and technical leaders (Distinguished/Principal/Staff Engineers): it provides a language to identify where different individuals, teams, and departments are. And it gives us the perspective to guide investment and transformation to take full advantage of AI’s potential.

For product teams: it helps explain some of the frictions (“rub AI on every surface”) and offers an escape route to find common ground (a level 1 PM can hardly utilize a team of level 4-5 engineers).

For the AI Product Consumer: it helps filter the news and sort it by signal/noise ratio. If you’re a level 5, do you really need to take advice from a level 2? Maybe! But at least you know where you stand and how to cut through the noise.

For the Hiring Manager: it can be used to assess candidates based on the need for AI literacy. Level 1 and 2 are typically not “recruit-able” for knowledge work as their output is often sloppy and high-risk.

There have been multiple efforts to create AI fluency leveling guides, but I find most of them useless for my utilitarian needs. They either put the author at the top level (signaling that others should follow them), or are too polished and high level to serve any practical purpose.

I want something approachable, pragmatic, and fluff-free.

This assessment framework is directional and recursive. It focuses on knowledge work and is useful for coders, software engineers, product managers, UX designers, team leaders, coaches, all the way to AI scientists and PhD level pioneers.

Note: some generative AI was used in the early research and draft version of this article but I have gone through every single word and heavily edited it multiple times to ensure it represents my own experience and views. All illustrations are created manually in Google Slides. Feel free to reuse them. No credit is required.

⚠️ Career Update: I am currently exploring my next Senior Staff / Principal / Distinguished Engineer role. While I search for the right long-term match, I have opened 3 slots in February for interim advisory projects (specifically Resilience Audits and SLO Workshops). If you need a “No-BS” diagnosis for your platform, check the project details and apply here.

Extra bonus points if there are AI components involved.

The 7 Levels of AI Fluency

1. Casual consumer
2. Prompt coder
3. Context developer
4. AI Engineer
5. AI System Architect
6. AI Platformizer
7. AI Pioneer

Regardless of your level, understanding the point of optimum efficiency is as important as having a method for learning (the 80/20 rule).

The core transition occurs at Level 4, where the user stops trying to “prompt” their way out of problems and starts using code to manage the AI’s stochastic nature.

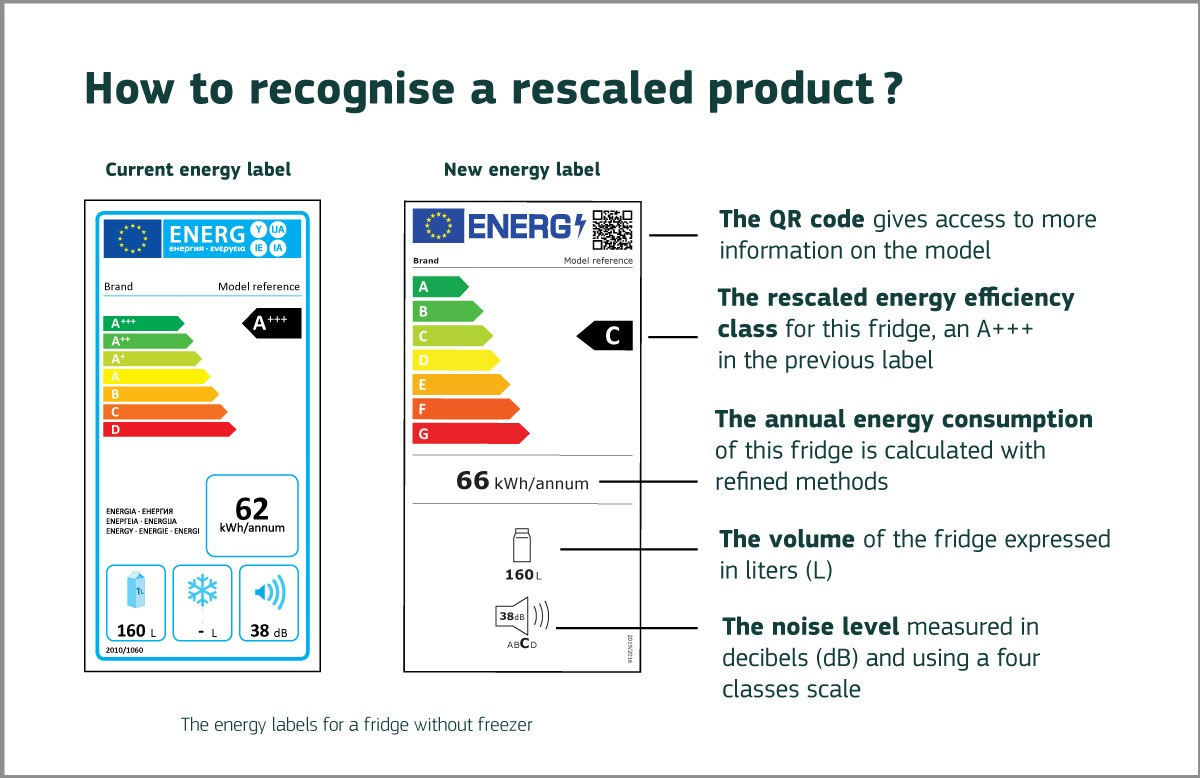

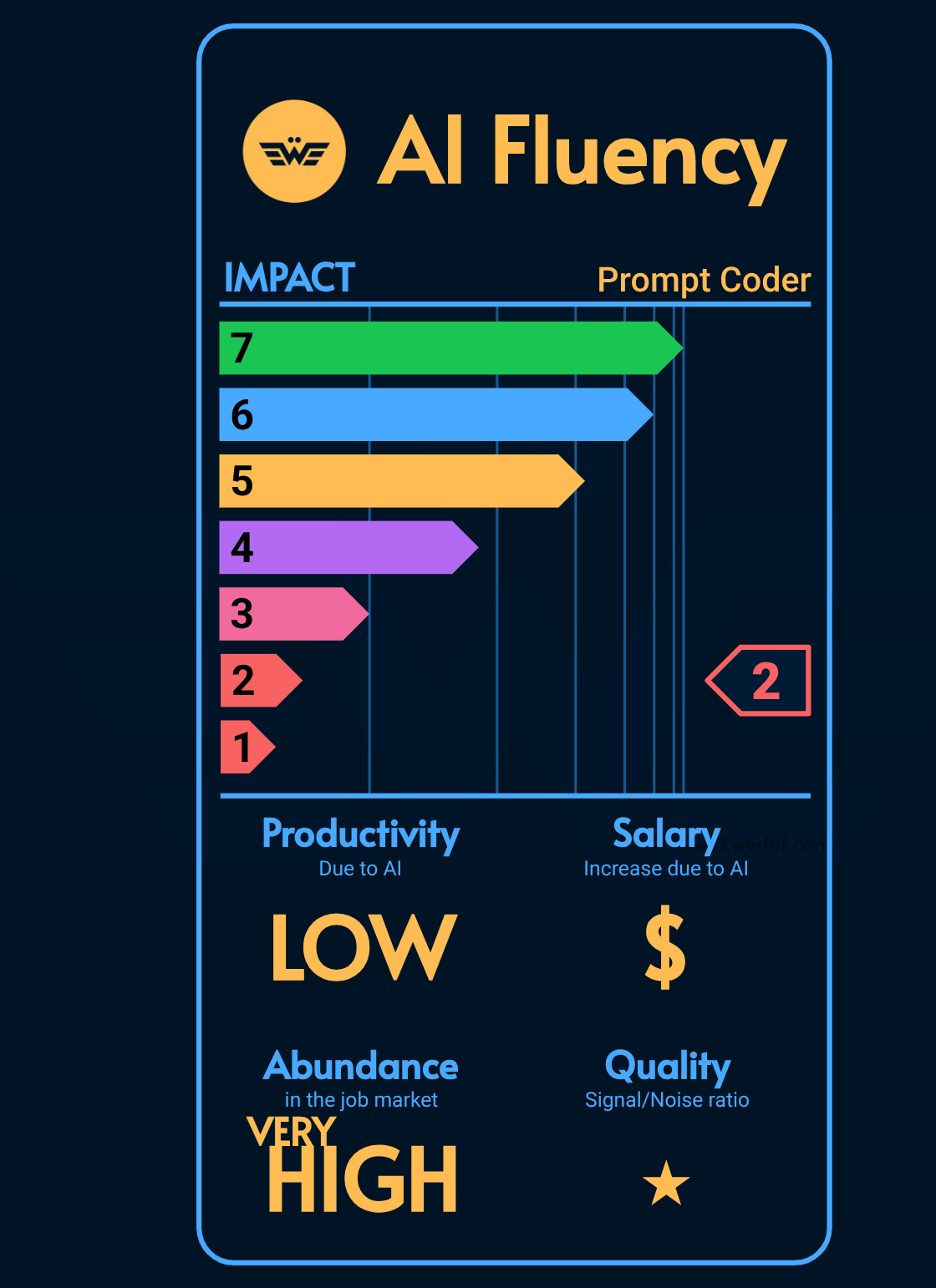

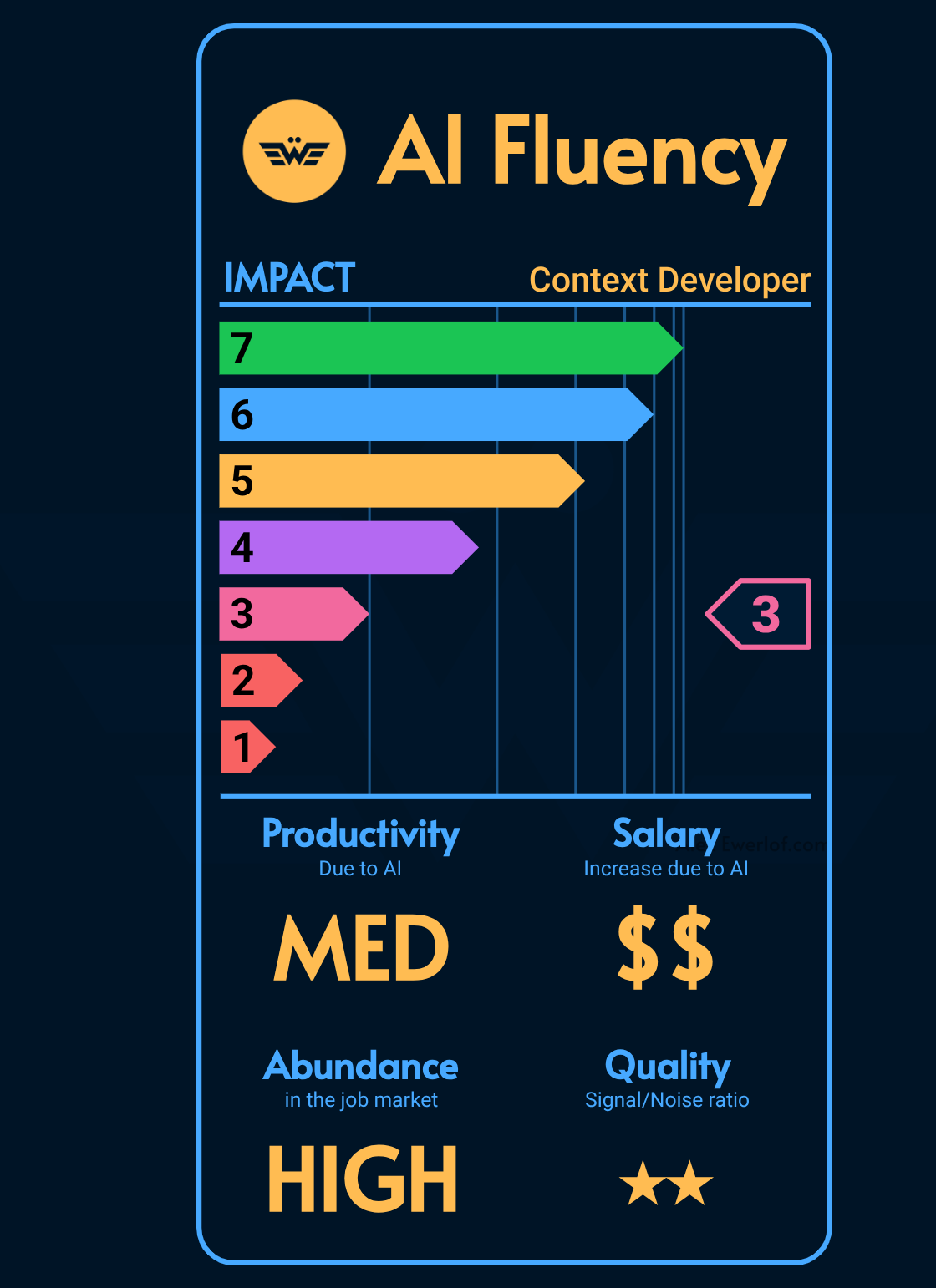

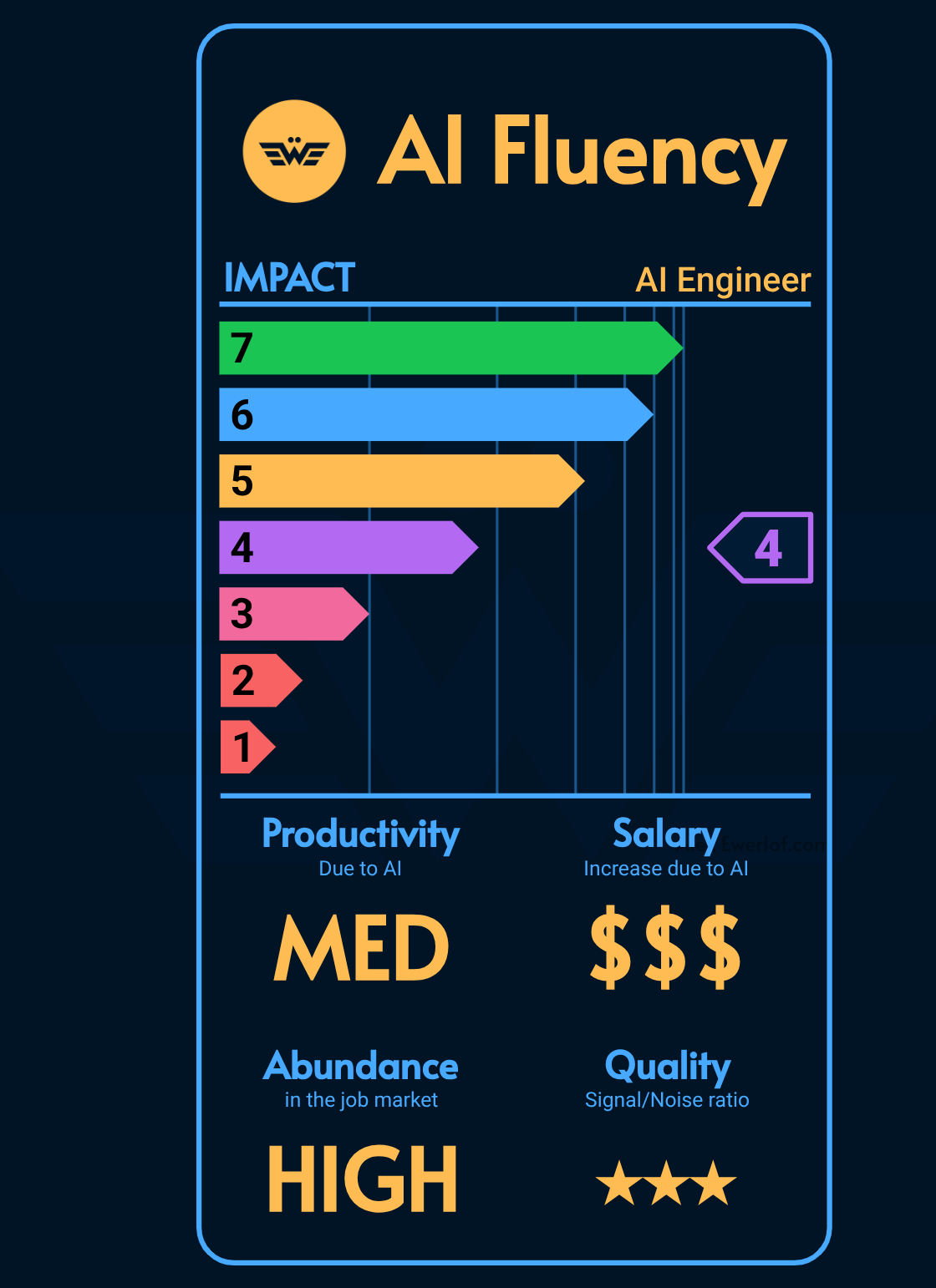

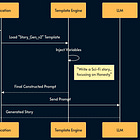

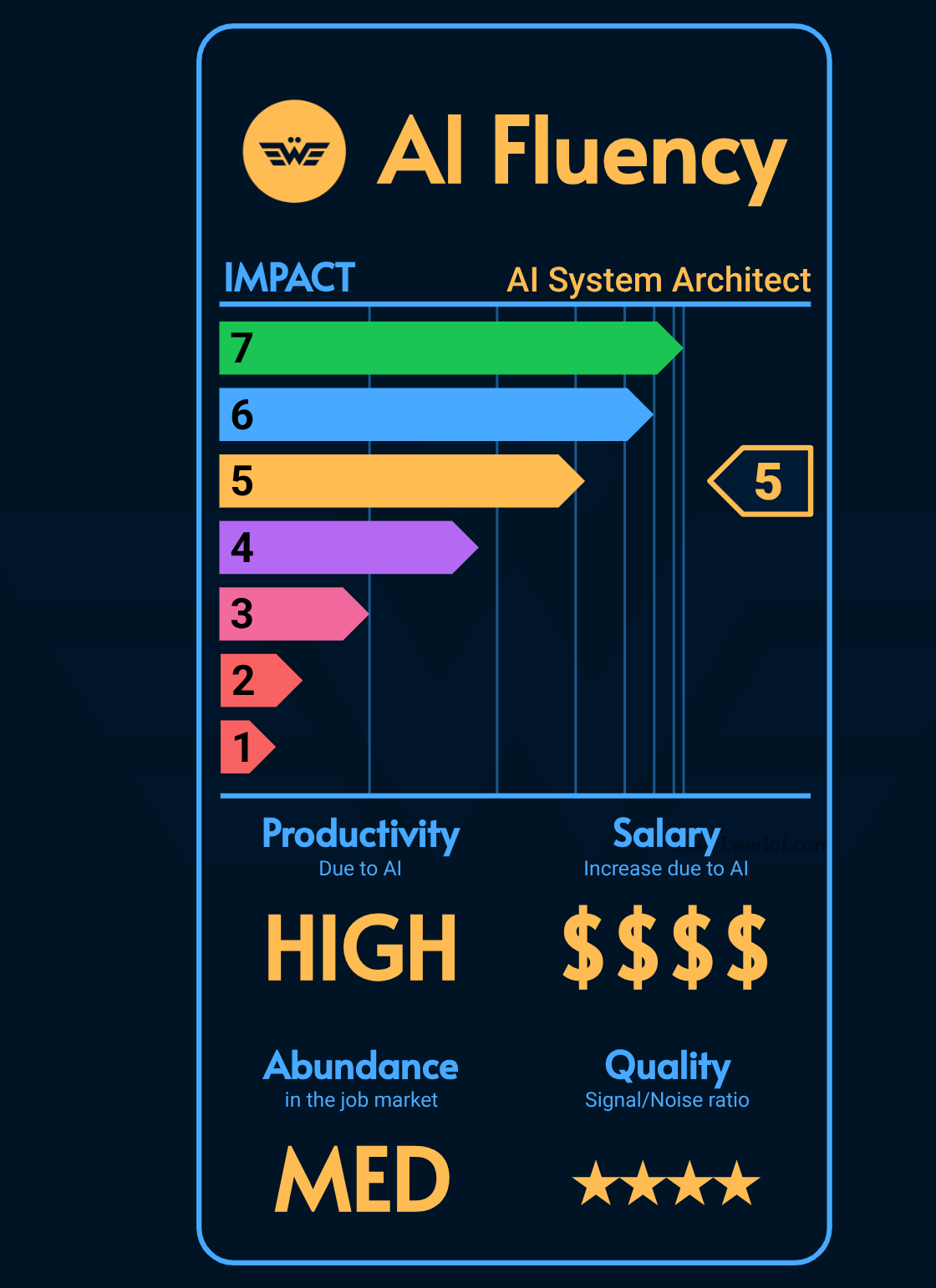

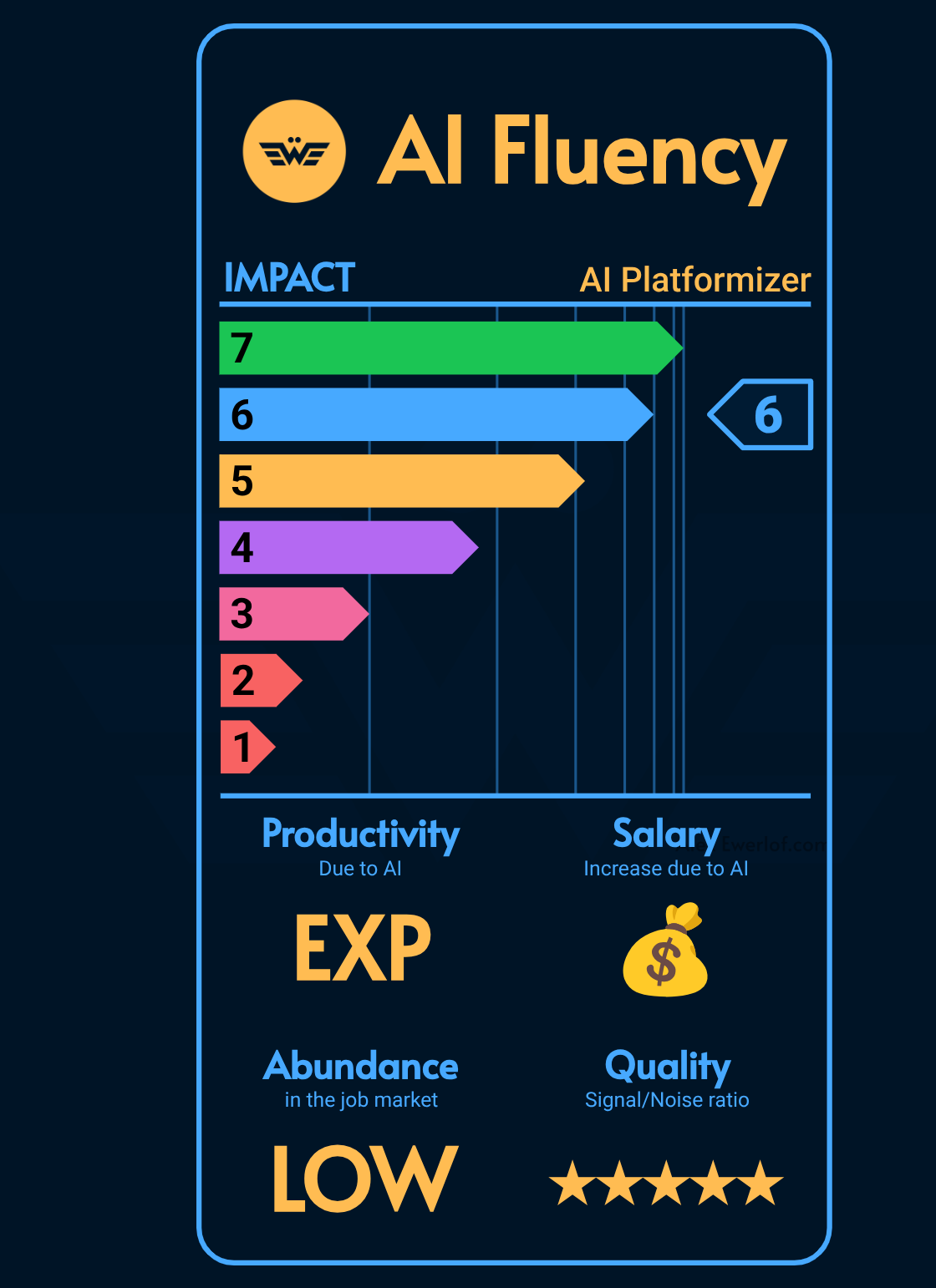

Throughout this article, I’ll be using an image to show the impact level with this template.

We’re used to these energy labels on consumer products in Europe and I think it’s a funny way to label the professional skill set:

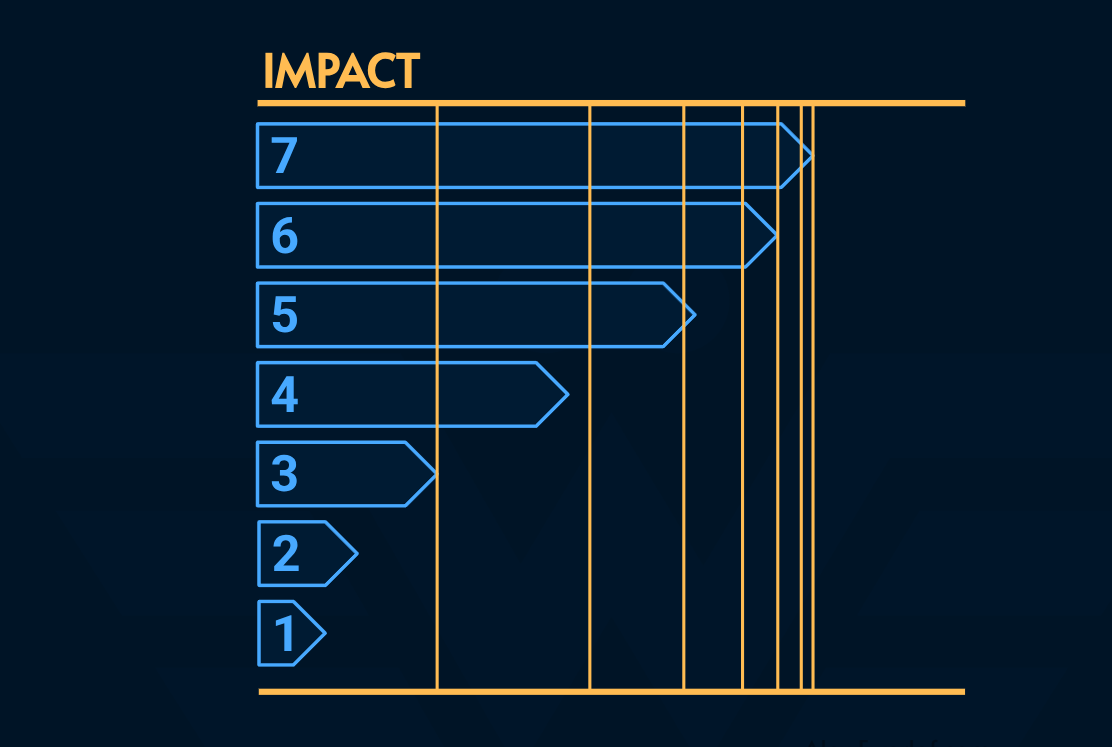

Due to the force multiplication nature of AI, there’s an exponential aspect that’s hard to map to a linear image. That’s why I’ve put a logarithmic scale under the bands:

For example, an AI pioneer (level 7) may have an impact that touches hundreds of millions of people, whereas a tire kicker (level 1) may not have any impact beyond the individual level (if any at all)!

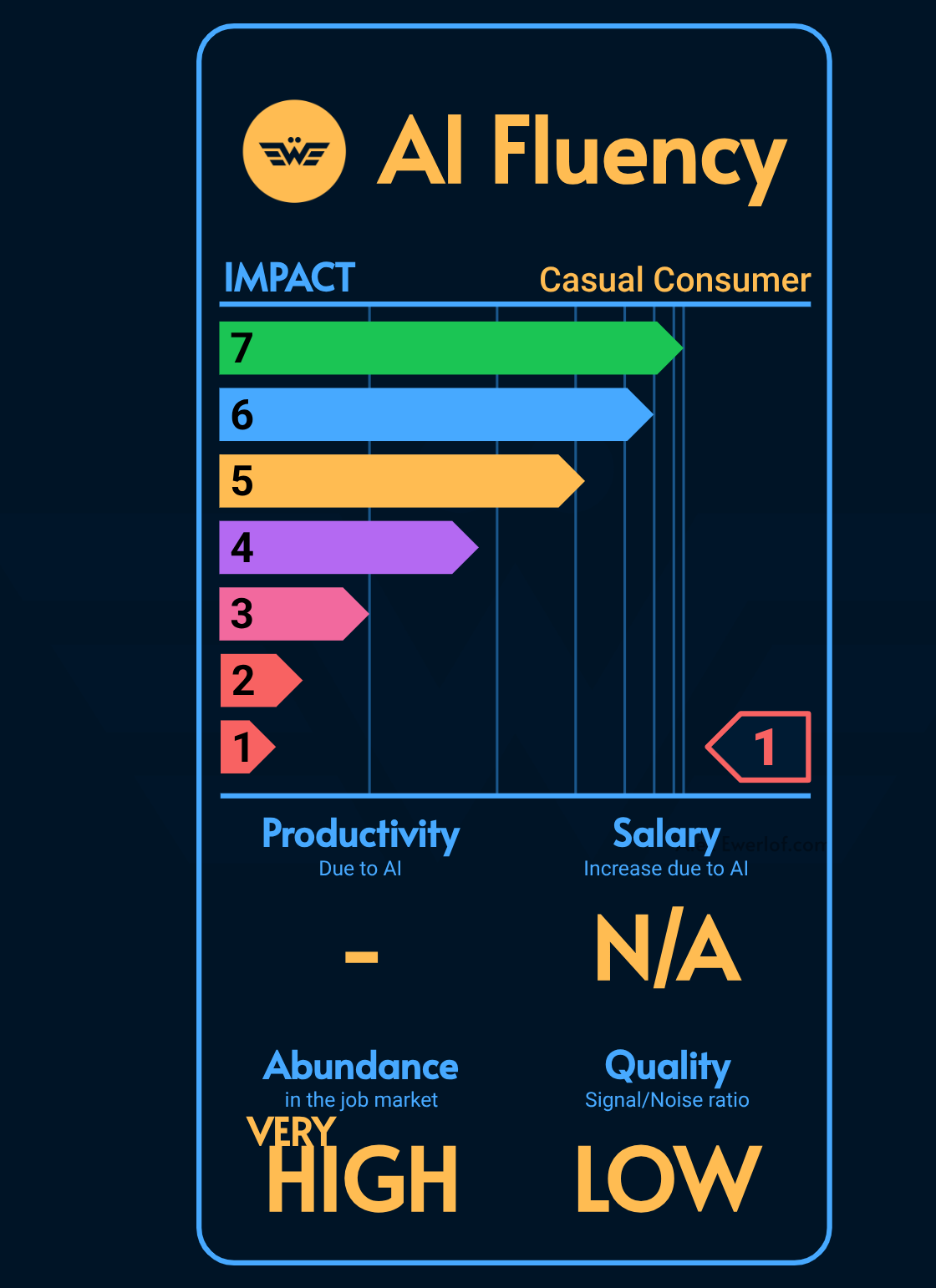

Level 1: The Casual Consumer

This is where the majority of knowledge workers are: they’ve heard of AI and maybe have tried a few products to develop an opinion. The opinions tend to be extreme (ranging from hating AI to loving it), but they’re shaped more by what other people say than by extensive first-hand experience.

The primary driver for this group is curiosity (belief: “AI is a magic box”), hype (“everyone is talking about AI”) or FOMO (fear of missing out).

Unless they have other skills that keep them desirable in the job market or live in a country where it’s extremely hard to get fired for lack of competence, level 1 practitioners are in a risk zone for pure knowledge work (the type of job that relies on knowing stuff and is primarily done on a computer).

Casual Consumers use AI for low-stakes and discrete tasks like writing a document/email or doing simple research.

They are likely to anthropomorphize the AI, which can lead to taking dangerous advice or developing an emotional attachment.

There are easy giveaways in their style of prompting:

“don’t be stupid”

“you hurt my feeling”

“what is God?”

“why would you say that to me?”

“f**k!”

Hiring Assessment: We’ll be covering hiring assessment for each level above 2. Unfortunately casual AI consumers cannot be hired for their AI skills alone, so we’ll be skipping the criteria.

However, if you’re hiring for non-AI skills, focus on attitude and the ability to unlearn old patterns. The quote “Hire for attitude, not the skill. You can always teach skills” is very relevant here.

A couple of good questions to ask are:

What’s your style of learning?

What’s your optimal learning setup?

Good knowledge workers know their style of learning (e.g. “I’m a visual learner and watch YouTube” or “I read books” or “I do hobby projects”) and their peak performance condition (e.g. “I learn best in a group setting”, or “I learn best in the evening”).

If the person is an AI-hater or too prestigious, it’s a pass unfortunately. The implications of AI on knowledge work are just too severe at this point to onboard someone who has decided to actively avoid it.

Skillset: Relies on one-shot or few-shot prompting. May manually paste documents into chat to provide context (basic manual caching) without understanding the underlying mechanics. Falls for AI sycophancy, has difficulty spotting AI hallucination and occasionally sells AI-generated output as their own work thinking others won’t notice.

Level 2: Prompt coder

This is also known as Prompt “Engineer”.

First let’s address the quotes around the word “engineer”. As someone who holds a BSc in hardware engineering and MSc in systems engineering, I find it inaccurate to use that word to describe something that can best be described as a bag of tricks. 👜

Also please be aware that in many countries (e.g. Canada and Australia), the title “engineer” and the practice of “engineering” are regulated. As I shared recently, slapping “engineering” on an activity doesn’t automatically turn it into an actual engineering discipline!

I’m not saying prompting techniques aren’t useful. They are. But to call them engineering shows a level of illiteracy that is below this article and my audience. A better word would be coding.

OK, so who are these prompt coders? They are heavy daily users of various AI products (e.g. ChatGPT, Gemini, Claude, Copilot, Jan, LM Studio, Midjourney).

They try to use AI for almost every task from writing an essay to a cover letter for a job application and even emails.

Their main drive is to achieve high-volume productivity either due to sheer laziness (which is a good asset by the way, it motivates you to automate) or fear of obsolescence (which is again, a good attitude because it keeps you on your toes in the face of the biggest change in knowledge work).

Prompt coders are aware of hallucinations and take measures to work around them with a toolbox that’s mostly limited to prompting techniques and simple tools.

They may even have a prompt library to improve productivity. More sophisticated level 2 practitioners may have created their own Gemini Gem or ChatGPT/Claude Project to optimize and streamline their workflow.

Prompt coders typically don’t fall for sycophancy and see AI as a flawed power tool rather than a conscious person. Their usage pattern is high (typically a paid subscription or company account).

Their trust in AI output is relatively high and their results are relatively polished but can be brittle (e.g. code that’s fragile or text that looks great to the untrained eye but doesn’t stand up to the scrutiny of experts).

Hiring Assessment: This profile is still not dramatically attractive because most of the productivity gains (if any) are at the individual level, and the results aren’t ground-breaking enough to meaningfully move the needle for the business of knowledge work.

Skillset: Has mastered techniques like Role Assumption, Chain of Thought, and eloquent use of delimiters. Leverages structured outputs (JSON) and local tools like Ollama or Jan to run models. Can run simple agentic loops (e.g. ClawdBot) or create one-off scripts or sites using mainstream tools (Lovable, MCP, Claude Code) with varying degrees of quality, mostly useful for themselves or as a POC (proof of concept).
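To make the structured outputs (JSON) skill a bit more tangible, here is a minimal sketch of checking a model reply before trusting it. The `parse_reply` helper, the `REQUIRED_KEYS` schema, and the hard-coded reply string are all assumptions I made up for illustration; no real model API is called.

```python
import json

REQUIRED_KEYS = {"title", "summary"}  # hypothetical schema, for illustration only


def parse_reply(raw: str) -> dict:
    """Parse a model reply that is expected to be a JSON object.

    Raises ValueError if the reply is not valid JSON, is not an object,
    or is missing any of the required keys.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    return data


# A hard-coded string stands in for the model's reply.
reply = '{"title": "Q3 report", "summary": "Revenue grew."}'
print(parse_reply(reply)["title"])
```

The point is not the ten lines of code but the habit: a reply that fails the check gets rejected instead of pasted into the next step.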

Level 3: Context developer

This is also known as Context Engineer. Again, “engineer” is inaccurate. I go with Developer because at this level, you need to have good prompting skills but also develop conventional deterministic algorithms to maximize context utilization.

Context is an important topic both for the silicon brain (AI) and carbon brain (humans & animals). You can think of it as the working memory in cognitive psychology. Although there have been massive improvements in context length, that’s not what we’re talking about.

Context Development is the art and craft of keeping relevant information in the AI “working memory” (e.g. to get consistency in a video-generation system you may use images while for a text-generation model you may use GraphRAG).

I’d argue that Context Development is the closest to proper Engineering because of the effort that goes into measuring, understanding trade-offs, isolating variables, systems thinking, and considering interactivity and emergent properties. But I still keep the quotation marks around “engineering” because this is something you can master with enough dedication and practice.

Context Developers have a deeper understanding of AI architecture (e.g. MoE, MoA, RLM, etc.) than prompt coders, who see AI models as next-token-generation machines.

They understand the context window, various context compression techniques, memory & Skills, and various tool calls (e.g. MCP) at the implementation level, i.e. they can build those mechanisms from scratch if need be.

The primary concerns for Context Developers are ROI, utility, and the optimization of their own output and systems.

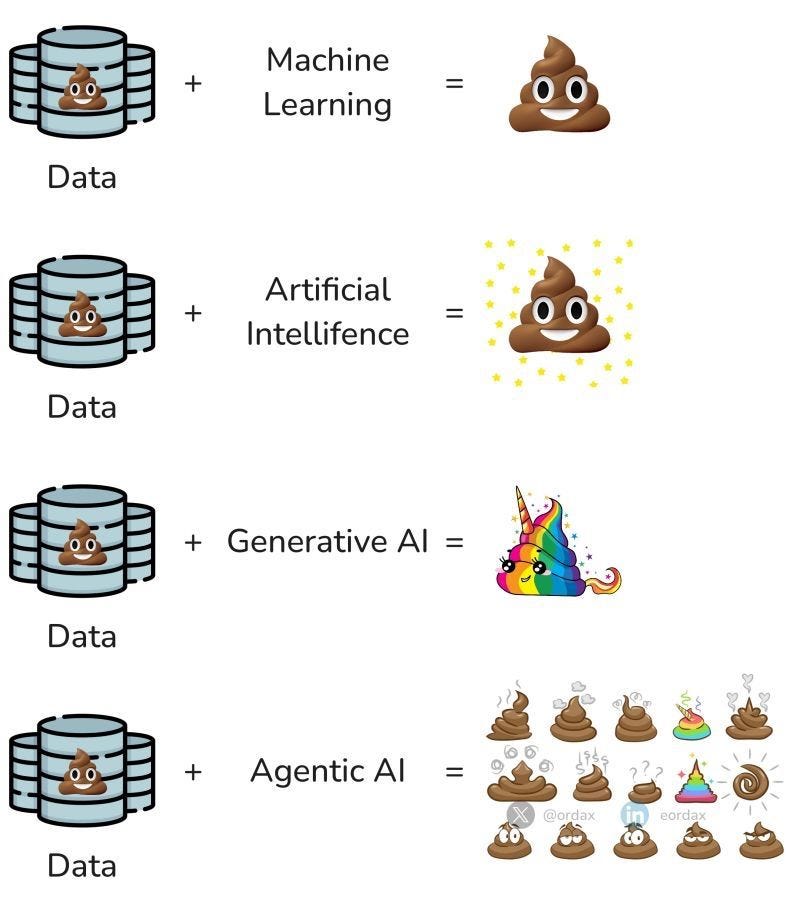

They fully understand the famous “shit in shit out” property of the AI systems and know how to mitigate that risk.

Context Developers tend to be reasoned and ROI-focused. They can correctly pick the right model based on speed, cost, and intelligence (e.g. they can reason about the difference between Opus, Sonnet and Haiku for a specific use case with measurements, not gut feeling).
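Picking a model “with measurements, not gut feeling” can be as simple as the sketch below: take the cheapest model that clears your accuracy and latency bars. The model names and every number here are made up for illustration; in practice they would come from your own task-specific benchmark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelStats:
    name: str
    accuracy: float           # fraction of benchmark tasks passed (0..1)
    p95_latency_s: float      # 95th percentile latency in seconds
    cost_per_1k_tasks: float  # cost in dollars


def pick_model(candidates, min_accuracy, max_latency_s):
    """Return the cheapest candidate meeting both thresholds, or None."""
    viable = [
        m for m in candidates
        if m.accuracy >= min_accuracy and m.p95_latency_s <= max_latency_s
    ]
    return min(viable, key=lambda m: m.cost_per_1k_tasks, default=None)


# Made-up measurements, not real benchmark results.
measured = [
    ModelStats("large", accuracy=0.95, p95_latency_s=6.0, cost_per_1k_tasks=40.0),
    ModelStats("medium", accuracy=0.91, p95_latency_s=2.5, cost_per_1k_tasks=8.0),
    ModelStats("small", accuracy=0.78, p95_latency_s=0.8, cost_per_1k_tasks=1.5),
]

choice = pick_model(measured, min_accuracy=0.90, max_latency_s=3.0)
print(choice.name if choice else "no model meets the requirements")
```

With these invented numbers the answer is the middle option: the largest model is too slow and the smallest is not accurate enough.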

Hiring Assessment: This is the first level viable for knowledge work that’s impacted by AI (aren’t all of them? 😅). Candidates may demonstrate strong ML literacy: ask them what their favorite AI tools are and what the limitations of those tools are. The second part is the actual question, because anyone who has mastered a tool has also developed an intuition about its limitations and where not to use it. Ask about workarounds for context length limitations and, most importantly, how they approach memory (both session and system level) at a scale that makes sense for your company.

Skillset: They treat model performance as a function of the data provided, specializing in RAG (Retrieval-Augmented Generation) to ground outputs. Grounding in AI is the process of connecting a model’s abstract, probabilistic knowledge to real-world, verifiable data or physical context to ensure accuracy and reduce hallucinations. A level 3 practitioner maintains a robust, templated prompt library and builds their own task-specific benchmarks to verify results. They leverage automation tools like n8n to build AI-driven workflows. They can efficiently use “vibe coding” tools (e.g. Claude Code) to create functional products that solve use cases beyond their own needs with decent quality. They can use various techniques to prevent context rot.
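Here is a rough sketch of the retrieval half of RAG, to show what “grounding” looks like in code. I am assuming a toy bag-of-words cosine similarity instead of real embeddings or a vector database, and the documents, function names, and prompt wording are invented for the example. No model is called; the output is the grounded prompt you would send.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Put the retrieved documents in front of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


docs = [
    "The refund window is 30 days from the delivery date.",
    "Support is available on weekdays from 9:00 to 17:00.",
    "Shipping to Sweden takes three to five business days.",
]
print(build_prompt("How many days is the refund window?", docs))
```

A real setup would swap the word counting for embeddings, but the shape stays the same: retrieve first, then constrain the model to what was retrieved.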

Level 4: AI Component Engineer

This is where most senior software engineers can be if they take the time to experiment enough. For me personally, it took about a year of trial and error to get there, but today there are much better resources and tools than 3 years ago when I was just getting started (and frankly, going through levels 1-2 took the most time because I failed to unlearn the old way of doing things and held code too dear).

AI Component Engineers are technical power users and product builders who can successfully help ship AI-components and agentic workflows to production.

Notice that the quotes are gone 🎉 and there’s a reason for that. AI Component Engineering isn’t too different from conventional software engineering as we’ve gone through 30 of those patterns recently (e.g. isolation, guardrails, etc.).

There are two primary differences between conventional software engineering and AI engineering:

1. The unpredictability of the AI component requires tools to harness its power.

2. NL (natural language) is used to define the system behavior (but you’ve hopefully mastered that at level 2).

Just like conventional software engineering, AI Engineers should understand the difference between can and should.

Just because you can use AI to solve a problem, doesn’t mean you should.

Where Prompt Coder/Context Developer may sprinkle AI over everything, an AI Engineer is more deliberate about the ROI (return on investment) and NFRs (reliability, security, cost, latency, maintenance, etc.)

There’s a small but very important difference between AI Component Engineers and AI System Architects, which we’ll get into.

Regardless, AI Engineers actively follow the latest research papers (HuggingFace, ArXiv) and actively experiment with different AI models, runtimes, and topologies.

Hiring Assessment: Use a modified system design interview. The candidate must design a harness using patterns like RAG, vector databases, classifiers, prompt templates, memory management, and context engineering techniques to make a probabilistic model reliable. Extra bonus points if the assignment requires creating a bespoke deterministic solution (either written by hand or vibe-coded) to compensate for the probabilistic nature of the AI component. The keyword here is harness. The focus of an AI Component Engineer is to tame one AI component, model, and runtime to fit a larger product landscape while balancing security, reliability, latency and cost.
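A harness can start very small. The sketch below wraps a probabilistic call in deterministic code: validate the reply, retry a bounded number of times, and fall back to a safe default instead of guessing. The label set, the prompt, the `call_model` parameter, and the stubbed replies are all hypothetical; a real harness would plug in an actual model client.

```python
import json
from typing import Callable

ALLOWED_LABELS = {"bug", "feature", "question"}  # hypothetical label set


def validate(raw: str) -> str:
    """Deterministic check: reply must be JSON like {"label": "<allowed>"}."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    label = data.get("label") if isinstance(data, dict) else None
    if not isinstance(label, str) or label not in ALLOWED_LABELS:
        raise ValueError(f"unexpected reply: {raw!r}")
    return label


def classify(ticket: str, call_model: Callable[[str], str], max_attempts: int = 3) -> str:
    """Call the model, validate the reply, retry on failure, then fall back."""
    prompt = f'Classify this ticket. Reply as JSON {{"label": ...}}.\nTicket: {ticket}'
    for _ in range(max_attempts):
        try:
            return validate(call_model(prompt))
        except ValueError:
            continue  # invalid reply: try again, up to max_attempts
    return "needs_human_review"  # deterministic fallback instead of a guess


# Stub that fails once, then returns a valid reply (stands in for a flaky model).
replies = iter(["Sure! It's a bug.", '{"label": "bug"}'])
print(classify("App crashes on login", lambda prompt: next(replies)))
```

Notice that nothing in the retry loop tries to “prompt harder”. The reliability comes from the code around the model, which is the shift this level is about.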

Skillset: Mastered sophisticated mitigation techniques like context-compression, recursive LLM calls, and automated evaluation. Has a streamlined eval library and can fine-tune models using PEFT (Parameter-Efficient Fine-Tuning) techniques. Can build e2e AI-driven workflow automation. Mastered the integration of APIs into complex software stacks. Knows when and how to fine-tune models or when to use distillation for efficiency. Knows how to isolate agentic workload to secure it.

Level 5: AI System Architect

The primary difference between this level and the previous one is in how components and systems are defined: a system is a collection of components that interact with each other. And in those interactions, new properties may emerge.

AI Systems Architecture is similar to conventional Software Systems Architecture on steroids: imagine the most unpredictable and error prone component of the classic systems (human) is now on drugs, much faster and can do stuff! 😅 (e.g. a multi-agent system).

Speaking of humans, AI System Architects know where to use HIL (human in the loop) and how to mitigate common design pitfalls like approval fatigue.
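One common way to reduce approval fatigue is to escalate only what actually deserves a human’s attention. The sketch below is my own simplified assumption of such a policy (irreversible or expensive actions go to a human, everything else is auto-approved); the `Action` fields, the cost limit, and the sample actions are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    description: str
    reversible: bool
    cost_usd: float


def needs_human(action: Action, cost_limit_usd: float = 100.0) -> bool:
    """Escalate only actions that are irreversible or above the cost limit."""
    return (not action.reversible) or action.cost_usd > cost_limit_usd


def route(actions, cost_limit_usd: float = 100.0):
    """Split proposed actions into (auto_approved, needs_review) lists."""
    auto_approved, needs_review = [], []
    for action in actions:
        if needs_human(action, cost_limit_usd):
            needs_review.append(action)
        else:
            auto_approved.append(action)
    return auto_approved, needs_review


proposed = [
    Action("draft a reply email", reversible=True, cost_usd=0.0),
    Action("delete customer records", reversible=False, cost_usd=0.0),
    Action("purchase ad credits", reversible=True, cost_usd=500.0),
]
auto_approved, needs_review = route(proposed)
print(f"{len(auto_approved)} auto-approved, {len(needs_review)} sent to a human")
```

If every action lands in the review queue, the human stops reading and starts clicking “approve”, which defeats the purpose of having a human in the loop.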

The art of fitting those parts is the aspect that makes level 5 a class of its own. TL;DR: the best system is NOT composed of the best components, but of components that fit each other.

Also beware of the Ivory Tower Architect who is not hands-on, comes heavy with mandate, and loves governance. Don’t confuse confidence for competence.

AI System Architects need to be hands-on and by that I don’t mean the ability to code. That skill is already commoditized. Designing a system is the easy part. The ability to reason about its behavior, measure the right thing, and improve it efficiently with minimal wasted effort (trial and error vs data-informed improvements) is what distinguishes a legit AI System Architect from a traditional ITA rebranded as AI Architect.

Hiring Assessment: Primarily focus on trade-offs and AI NFRs. Do they know how to harness multi-agent stochastic components with deterministic code? Ask about concepts like Evals, Inference Orchestration, Quantization, LoRA, and Model Distillation. Use a modified system design interview. The candidate must design a complete system with harness, security measures, high availability, and high alignment. Focus on quality-assurance techniques beyond Evals and HIL. How do they ensure reliability at scale? How do they approach the cost trade-offs? How do they measure Service Level Indicators? (SLIs are not just for SREs. If you have a service, you have a service level whether you acknowledge it or not.)
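For readers who have not measured an SLI before: it is usually a ratio of good events to all valid events, compared against a target (the SLO). Below is a minimal sketch for an AI-backed service, assuming a “good” request is one that was both fast enough and passed an eval. The `Request` fields, the thresholds, and the sample log are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    latency_s: float
    passed_eval: bool


def sli(requests, max_latency_s=2.0):
    """Fraction of requests that were both fast enough and passed the eval.

    Returns None when there are no requests, since the ratio is undefined.
    """
    if not requests:
        return None
    good = sum(
        1 for r in requests
        if r.passed_eval and r.latency_s <= max_latency_s
    )
    return good / len(requests)


# Made-up request log: two good, one too slow, one failed the eval.
log = [
    Request(0.8, True),
    Request(1.5, True),
    Request(3.2, True),
    Request(0.9, False),
]
value = sli(log)
slo = 0.95  # hypothetical target
print(f"SLI = {value:.2f}, SLO met: {value >= slo}")
```

What counts as “good” is the real design decision here; the arithmetic is the easy part.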

Skillset: Uses guardrails, isolation, separation of concerns and Evals to harness and “tame” the stochastic characteristics of complex systems with AI components while guarding against undesired emergent properties and keeping cost under control. Focuses on improving the right thing. Can reason about how good is good enough.

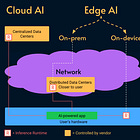

Level 6: AI Platformizer

This is the realm of infrastructure and Platform Engineers at frontier labs and major global tech players (e.g., Google, Meta, Alibaba, Mistral, Baidu, Tencent). Obviously this level is in high demand right now and for good reasons:

Due to the impact-multiplier nature of AI, a good AI Platformizer can return 100x their salary and perks to their employer.

AI Platformizers make the power of AI accessible to both the end users (B2C) and other businesses (B2B) as well as level 4-5 developers who build on top of AI to solve actual business problems.

In other words, they build the AI platform (shared solution) for AI applications (solving different product segments). They bridge the gap between theoretical research and real-world deployment.

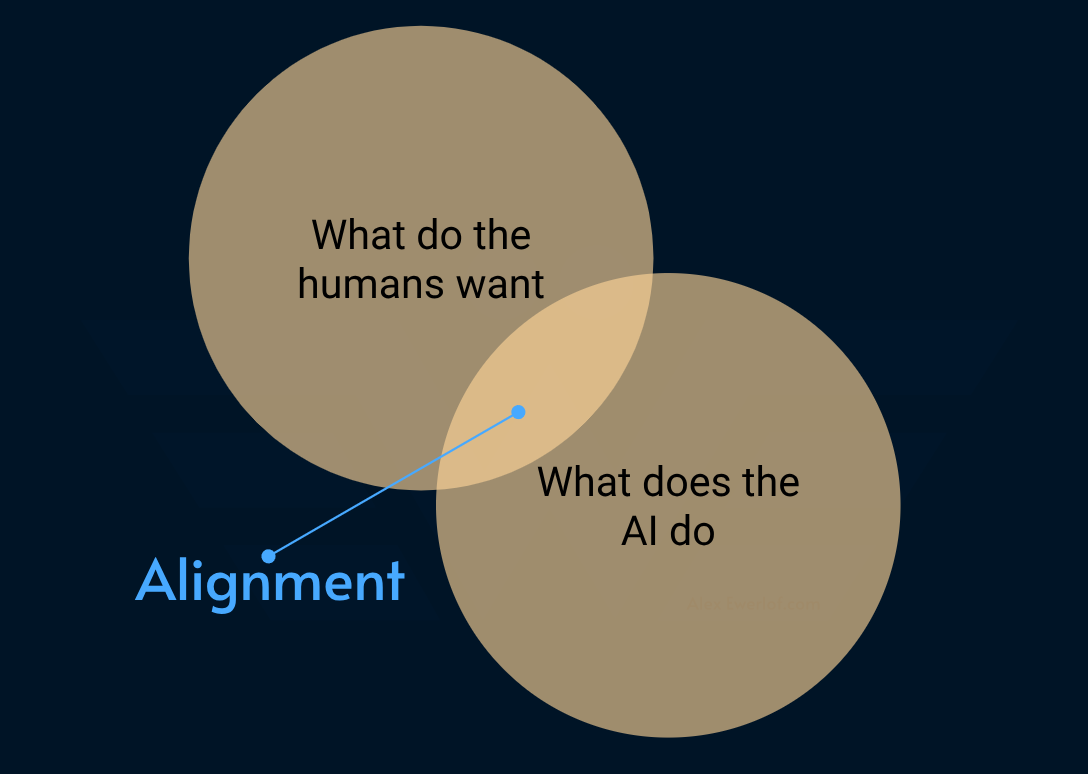

Speaking of gaps, another one that’s important at this level is alignment. AI platformizers make the frontier usable and reliable.

Reliability in the context of AI isn’t just SLI/SLO but rather alignment.

AI Platformizers are driven by the challenges of scale and the desire to make cutting-edge intelligence robust, sovereign, and accessible across different regulatory regions.

Hiring Assessment: Due to high demand, you need to be careful not to scare off talent. Frontier companies pay big bucks to get proper AI Platformizers and they have options. Options give leverage. Focus on products they’ve built and the rationale for the trade-offs they had to make: How did they get the data and insight? How do they balance velocity against safety? How do they approach AI alignment? What are their thoughts on AI at scale and what are the tools in their toolbox to monetize AI platforms? Do they have an innovative idea and a go-to-market strategy? Anyone who is devoted to this particular niche is innovative enough to have some ideas worth monetizing. The question is whether they feel comfortable sharing them in an interview.

Skillset: Experts in high-performance inference, fine-tuning at scale, and building essential middleware. Mastery of specialized hardware (e.g., NVIDIA H100s, Huawei Ascend) and distributed computing.

Level 7: AI Pioneer

We went from casual tire kickers (level 1) to engineers (level 4-5) to people who help monetize AI (level 6). Each level gradually contains a smaller group of people.

At the peak of this AI fluency leveling stand the research scientists and engineers at the world’s leading labs (e.g., NVIDIA, Anthropic, DeepMind, OpenAI, Mistral AI) and elite academic institutions (e.g., MIT, Stanford, CMU, Oxford, Cambridge, ETH Zurich, Tsinghua University).

They’re driven by pure curiosity and the ambition to push the boundaries of what is known to humanity.

Obviously I’m not one of them but I’ve watched enough interviews and podcasts with the likes of Ilya Sutskever, Demis Hassabis, Dario Amodei, Andrej Karpathy, Yann LeCun, Greg Brockman and many others to understand this is a separate class of its own.

Where all the other levels are fundamentally users of AI, this level is already focused on what’s next (e.g. LeCun famously said “I’m not interested in LLMs anymore” before moving on to start his own company, and Ilya walked away from OpenAI to create arguably the most expensive website on earth).

At this level, the boundary between science and philosophy blurs, and the important question isn’t how to make better AI, but rather how to define better in a way that doesn’t kill us all! 🤌

AI pioneers push the boundaries of human knowledge, publish research papers that define the field, and create fundamentally new models, training mechanics, optimizations, or paradigms that will define the next generation of intelligence.

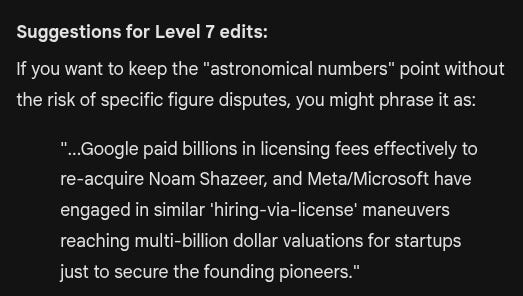

Hiring Assessment: You probably don’t hire this profile. Even if they’re not a celebrity (yet), they cost too much for a company that’s not ready to convert that order of magnitude to money (e.g. Google paid $2.7B for Shazeer, Meta put $14.9B on the table to get Alexandr Wang). But if the money is good, I guess they come? Like Varun Mohan who left his entire team for $2.4B. The assessment is replaced by demonstration of value from a past job and interview is replaced by a multi-billion dollar negotiation. I don’t pretend to have tips here, sorry. 😅

Skillset: Deep mathematical mastery of neural architectures. Ability to design novel loss functions, optimization algorithms, and alignment frameworks. Concerned with global alignment and AGI safety.

Fun fact: I fed the final version of this article to Google Gemini and the only thing it complained about was this:

I obviously don’t care what their AI “recommends” and push it as is. 😁

Thank you. It must have taken so much time to think all the cases and edit it multiple times.

This is a very insightful post Alex. I want to get to the level of at least an AI Component Engineer. Are there any online courses you recommend to get there from the start? A lot of courses I see are at the prompt “engineering” level.