Degradation vs disruption

What's the difference between service degradation, service disruption, and service outage, and why does it matter?

In the context of reliability engineering, there are three terms that are related but sometimes used incorrectly.

Loosely speaking:

Service degradation is when the quality of the service drops.

If the service entirely stops, it’s a service disruption.

If the disruption takes too long, it’s a service outage.

That definition lacks nuance. Let’s dig into four aspects of service levels to tell these terms apart, with some examples and illustrations.

1. Availability

A service usually has multiple capabilities. For example:

Amazon Web Services includes many different services such as databases, runtimes, queues, identity and access management, etc. It also has other capabilities like documentation and pages such as the terms of service, which are essential (and some of them are legally required) but have no purpose without the core services of AWS. There would be no documentation if there were no AWS services!

Core capabilities are the ones that drive the business. For GitHub, it’s the git servers; for a car, it’s the ability to drive (and brake); and for a dish, it’s edibility! The core features define the service.

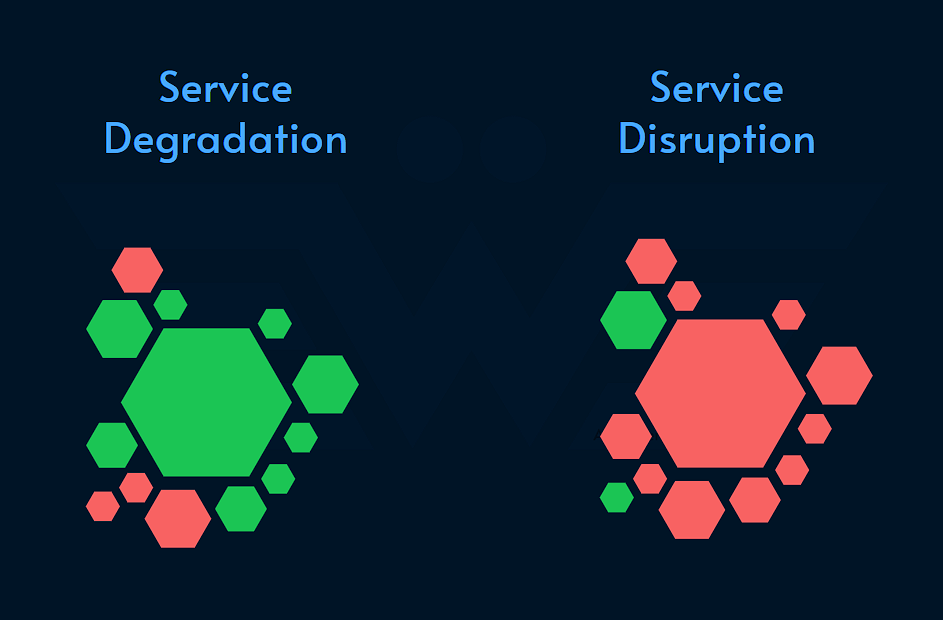

In a service degradation, the core capabilities are still available, although with a poorer quality of service.

For example, in the early days of ChatGPT, the “AI” failed to carry a conversation and would randomly break. This is a service degradation because although it hurts the user experience, the site is still usable for some users (or for the same user after a retry).

The emphasis here is on core capabilities that define the service. If only less important capabilities are affected (e.g. AWS terms of service pages are not accessible), it’s still considered a service degradation because the main service is not disrupted.

Graceful degradation patterns (like fallback) may temporarily switch to using an alternative backup strategy for business continuity.
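To make the fallback idea a bit more concrete, here is a minimal Python sketch. All the names in it (`with_fallback`, `personalized_recommendations`, `bestsellers`) are hypothetical and only for illustration. When the primary capability fails, the service answers with a cheaper alternative and flags the response as degraded instead of failing outright.

```python
from typing import Callable, List, Tuple


def with_fallback(
    primary: Callable[[], List[str]],
    fallback: Callable[[], List[str]],
) -> Tuple[List[str], bool]:
    """Try the primary strategy; on any error, use the fallback.

    Returns the result plus a flag telling the caller whether the
    response is degraded (served by the fallback).
    """
    try:
        return primary(), False
    except Exception:
        # A real system would log the error and likely catch narrower
        # exception types; this sketch keeps it simple.
        return fallback(), True


def personalized_recommendations() -> List[str]:
    # Hypothetical primary capability, simulated here as failing.
    raise TimeoutError("recommendation service timed out")


def bestsellers() -> List[str]:
    # Hypothetical backup strategy: a static, non-personalized list.
    return ["item-1", "item-2", "item-3"]


items, degraded = with_fallback(personalized_recommendations, bestsellers)
```

The `degraded` flag matters: it lets you count how often the fallback is served, so a quiet degradation doesn’t go unnoticed.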

When the core capabilities stop completely, it’s a service disruption.

For example, when AWS S3 was disrupted back in 2017, all GET, LIST, PUT and DELETE requests were failing, which rendered the service completely useless. This also impacted other AWS services that internally depended on S3.

2. Service Level

If you are measuring reliability using Service Level Indicators (e.g. latency, error rate, data freshness, etc.):

Service degradation shows up as error budget burn: the Service Level Status (SLS) drops below the Service Level Objective (SLO).

Service disruption shows up as error budget burnout: the error budget burns too fast for a sustained period of time.

For example, in 2018 GitHub experienced a service degradation in some internal systems, which led to out-of-date or inconsistent information being displayed to users for a bit over 24 hours. If they had an SLI for data correctness, they would have seen a rapid burnout that took 24 hours to recover from.

However, since their main git service capabilities were untouched, it wasn’t technically a service disruption.
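One way to put numbers on this is the burn rate: the observed error rate divided by the error budget (1 minus the SLO). A burn rate above 1 means the SLS in that window is below the SLO. The sketch below is only an illustration under my own assumptions: the function names are made up, and the thresholds (10x burn sustained for 60 minutes) are arbitrary placeholders that you would tune to your own service and risk appetite.

```python
def error_budget(slo: float) -> float:
    """Fraction of events that are allowed to fail, e.g. 0.001 for a 99.9% SLO."""
    return 1.0 - slo


def burn_rate(bad_events: int, total_events: int, slo: float) -> float:
    """Observed error rate relative to the error budget.

    1.0 means the budget is consumed exactly at the sustainable pace;
    anything above 1.0 means the SLS is below the SLO for this window.
    """
    if total_events == 0:
        return 0.0
    observed_error_rate = bad_events / total_events
    return observed_error_rate / error_budget(slo)


def classify(
    rate: float,
    sustained_minutes: float,
    fast_burn: float = 10.0,     # assumed threshold, not a standard
    min_minutes: float = 60.0,   # assumed threshold, not a standard
) -> str:
    """Rough label following the definitions in this section."""
    if rate <= 1.0:
        return "within budget"
    if rate >= fast_burn and sustained_minutes >= min_minutes:
        return "disruption"
    return "degradation"


# 5 failed requests out of 1000 against a 99.9% SLO, for 30 minutes
slow_burn = classify(burn_rate(5, 1000, 0.999), sustained_minutes=30)

# every request failing for 2 hours
burnout = classify(burn_rate(1000, 1000, 0.999), sustained_minutes=120)
```

With these assumed thresholds, the first case is labeled a degradation and the second a disruption. Note that this only looks at the service level; the other aspects in this post (availability of core capabilities, blast radius, consequences) still apply.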

3. Blast radius

One way to distinguish between service degradation and disruption is to ask:

Who is affected by the issue?

Is it just some users? Or all users? Is it the paid customers? Or the free customers?

If only some users are impacted, it might be a case of service degradation. But how many is too many? The answer depends on the consequences and the business appetite to take that risk.

For example, many cloud providers compartmentalize their infrastructure into separate data centers (regions) to decouple risks and localize them.

If Microsoft Azure goes down in the South India (Chennai) region, it doesn’t impact services that are running in other regions.

But if there’s a big cricket match going on, it can potentially lead to massive free advertisement for Azure, and not the positive kind! 😉

Another example is when an issue only affects a subgroup of customers:

Users on Android, Web, or iOS only

Users with uncommon configurations

Users on an older version of the software

Free tier users

Users in a specific city, country, or region
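If your telemetry tags each request with a segment, you can estimate the blast radius by computing the failure share per segment. Here is a small Python sketch; the data shape (a list of `(segment, failed)` pairs) and the function name are assumptions for illustration, not a reference to any particular monitoring tool.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def affected_share_by_segment(
    requests: Iterable[Tuple[str, bool]],
) -> Dict[str, float]:
    """Return the fraction of failed requests for each segment.

    `requests` is an iterable of (segment, failed) pairs, where the
    segment could be a platform, app version, pricing tier, or region.
    """
    totals: Dict[str, int] = defaultdict(int)
    failures: Dict[str, int] = defaultdict(int)
    for segment, failed in requests:
        totals[segment] += 1
        if failed:
            failures[segment] += 1
    return {segment: failures[segment] / totals[segment] for segment in totals}


# Made-up sample: only Android requests are failing.
sample = [
    ("android", True),
    ("android", True),
    ("ios", False),
    ("web", False),
    ("web", False),
    ("ios", False),
]
shares = affected_share_by_segment(sample)
```

In this made-up sample, every Android request fails while iOS and Web are untouched, so the issue is localized to one subgroup. Whether that counts as a degradation or a disruption still depends on how much of the business that subgroup represents.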

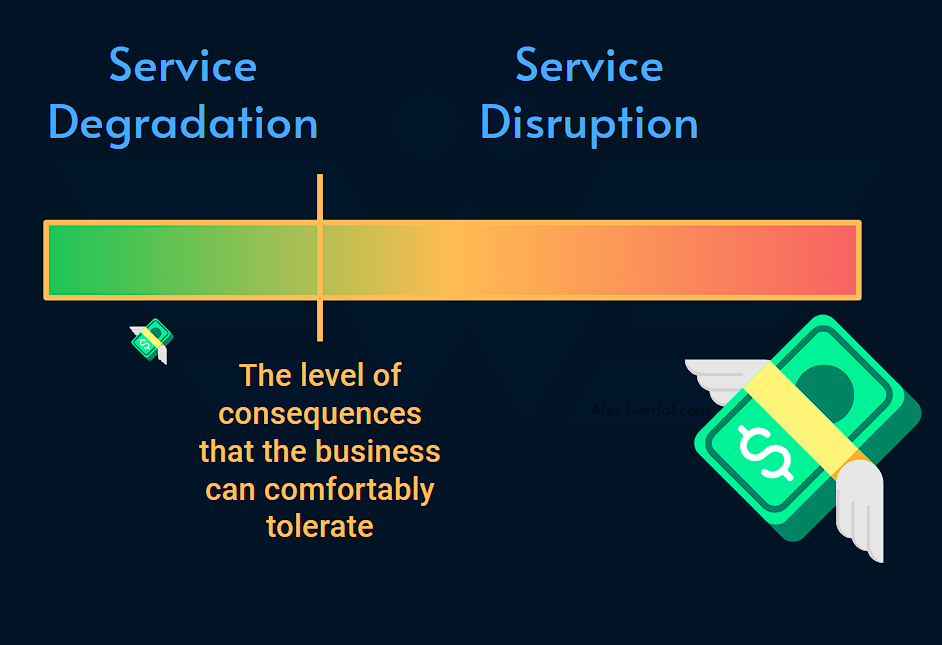

4. Consequences

Service degradation usually doesn’t have a big impact on the business. Some customers may be unhappy but not enough to leave. The business may lose some potential customers but not enough to go bankrupt. There may be a loss of reputation, some frustration on social media, and angry customer support calls but that’s usually about it. From the business standpoint, the consequences are negligible.

On the other hand, service disruptions have more severe consequences that go beyond “business as usual” or “cost of running the business”.

One of the main points of service levels is to shift from a perfectionist mindset to acknowledging that complex systems fail all the time. So let’s identify what defines a failure (which shapes the SLI), what is a reasonable expectation (which shapes the SLO), and at what cost (see the rule of 10x/9).

Service Outage

So far, we haven’t talked about the outage. That’s because outage and disruption are often used interchangeably.

However, the word outage typically carries a more dramatic meaning and is used for longer and more serious disruptions with dire consequences. For example: SLA penalties, loss (sales, revenue, users, etc.), or legal consequences (accidents, people getting hurt, etc.).

How long and how serious? It depends on the type of product, customer expectation, and risk appetite:

If your home electricity is cut for 12+ hours, you may call that an outage. Here in Sweden, for example, you’re entitled to compensation if that happens. In this cold corner of the map, many homes depend on electricity for anything from cooking and lights to heating the home. 12 hours is a very long time and can potentially put some lives at risk.

The 2011 PlayStation outage lasted 23 days and has its own Wikipedia page!

Back in 2022, Gergely Orosz covered the longest Atlassian outage of all time with an interesting insight: “The biggest impact of this outage is not in lost revenue: it is reputational damage and might hurt longer-term Cloud sales efforts for new customers. […] The irony of the outage is how Atlassian was pushing customers to its Cloud offering, highlighting reliability as a selling point.” Using the word outage (with all its dramatic connotations) is completely justified here.

For practical purposes, when your dependency goes down, you can use “outage”. On the other hand, when your service goes down, you can use “service disruption” or even wrongfully call it a “degradation” to downplay the impact! 😄 It just sounds fancier and more technical.

Conclusion

Reliability engineering is about preventing threats from becoming degradations and degradations from becoming disruptions.

So, is degradation better than disruption? I have a question for you:

Think about an online retail store. Which one has a more negative business impact?

A degradation which leads to showing the wrong prices to the customers?

Or a disruption where the site is not accessible at all?

If you guessed degradation, you guessed right. While intuitively we may think that a degraded experience is better than a total outage, showing wrong prices may literally cost the business more than the site being down and losing potential customers, who may in some cases come back after the site has recovered.

If customers buy the products below the intended price, the business loses money

If the site is down, customers cannot buy the products for the wrong price
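A back-of-the-envelope comparison shows how this can play out. Every number and function name below is invented for illustration (order volume, prices, profit margin, and the share of customers who come back later), so treat it as a sketch of the reasoning rather than a model of any real store.

```python
def loss_from_wrong_prices(
    orders: int, correct_price: float, shown_price: float
) -> float:
    """Money lost when each order is sold below the intended price."""
    return orders * max(correct_price - shown_price, 0.0)


def loss_from_downtime(
    expected_orders: int,
    correct_price: float,
    margin: float,
    return_rate: float,
) -> float:
    """Profit lost while the site is down.

    `return_rate` is the assumed share of customers who come back and
    buy after the site has recovered.
    """
    lost_orders = expected_orders * (1.0 - return_rate)
    return lost_orders * correct_price * margin


# Hypothetical incident: 1000 orders during the incident window.
# Degradation: a product worth 100 is shown (and sold) for 10.
wrong_price_loss = loss_from_wrong_prices(
    orders=1000, correct_price=100.0, shown_price=10.0
)

# Disruption: the same 1000 orders can't be placed, the margin is 20%,
# and 30% of those customers return later.
downtime_loss = loss_from_downtime(
    expected_orders=1000, correct_price=100.0, margin=0.2, return_rate=0.3
)

degradation_is_worse = wrong_price_loss > downtime_loss
```

With these made-up inputs the wrong prices cost several times more than the downtime. A bargain price may also attract more orders than usual, which would widen the gap further. Different inputs can flip the result, which is exactly why the answer depends on context.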

Sometimes, it is better to disrupt a service than to degrade it. The nuances are in the context, business model, risk tolerance, etc.

Before we close, I just wanted to highlight one point: One service’s outage may lead to another service’s degradation. If your team is providing services to another team in a loosely coupled manner:

When your service is disrupted, all your services are disrupted

but for the services that your consumer provides, your disruption might be just a degradation they have to handle (if they’ve done their homework).
