Service Level April Fool's Joke

Dissecting a joke to debunk some myths surrounding Service Levels

Every year, I do an April Fool’s joke at work. Sometimes it’s public (like back in 2022); other times it’s internal.

This year’s joke was rather technical and would make sense to anyone who has a good understanding of service levels, why they’re important, and, more importantly, how not to implement them!

I’m responsible for 100+ product teams and am confident that most people got the joke because, in the last 12 months, my partners in crime and I have supported them with the following:

Service Level Intro: a face-to-face meeting where we build a common language to speak about reliability and answer WHY we’re doing it at the company. The point is to have a dialogue. After doing this for 80+ teams, I turned it into a video that anyone can watch internally at their convenience. The intro is a prerequisite for the workshop.

Service Level Workshop: a 2-hour workshop where we systematically go through identifying services, consumers, risks, and metrics, and convert them into SLIs. If there’s enough historical data available, we set SLOs too. The workshop is one reason I made the Service Level Calculator.

Various documentation, templates, and tools: a Service Level Document that provides a uniform way to document service levels as a written contract between teams, loads of documentation and FAQs, a system catalogue, a dedicated Slack channel, etc.

Drill down: sitting down with key teams responsible for critical systems and drilling down into their architecture to ensure that their systems are resilient and the operational aspects are in good shape (alerting, on-call, postmortems, incident process/document, etc.).

Given the penetration of service levels across the org over the past 12 months, I was confident that the joke would be understood. In fact, anyone who didn’t understand it would immediately flag themselves as being outside my circle of influence, which means I have work to do.

Regardless, there’s a lot in that joke that is worth unpacking in public to learn about rolling out service levels across a large organization.

First, the raw joke. See how much you understand:

In preparation for the new CTO joining the company, we’re doing some house cleaning to make sure that we make a good first impression.

Accelerated SLO

One of the main reasons we implemented service levels is to acknowledge team autonomy and hold teams accountable for key product metrics. If 2023 was about putting the SLO leash on teams, 2024 will be about tightening that leash. We call it Accelerated SLO, or xSLO for short. Here’s how it works:

Volvo = six nines

At the top level, we aim for an xSLO of 99.9999% for two reasons:

Our brand is synonymous with safety and zero risk tolerance. We push the industry standards with 1 extra nine to demonstrate our commitment to this perception.

Our products are too expensive to afford any failure. We decided that we can have a maximum of 1 software failure in every 1,000,000 interactions for all products.

Regardless of the SLI, any team that has an SLO lower than 6 nines will collectively face the consequences in the policy.

SLO policy with teeth

A policy without execution mechanics is merely a suggestion. That's why we connect xSLO to the payroll system. The xSLO will be tied to team bonuses and the error budget will be paid from team member salaries. Here’s how it works:

The annual bonus will be calculated based on what is in your contract minus the part of the SLO that your team failed to achieve. The SLO that will be used is the top-level xSLO, not the team-level SLO. For example, if the bonus was supposed to be 10,000 SEK and your team’s service levels in the past quarter were at 85%, you’ll only get an 8,500 SEK bonus. Tying performance metrics to tangible rewards has been one of the key ideas that big tech uses to improve the quality of service, and we have no reason to diverge from that best practice.

The error budget will be tied to salaries. We’re now at the final stage of integrating our observability platforms into the payroll system and hope to be done before the CTO joins. When we’re done, any error budget will be deducted directly from the team’s collective salary during the same month. For example, if a team of 10 people causes two failed sales and the average sale makes 100,000 SEK for the company, the team must compensate the company a total of 200,000 SEK, which leads to a 20,000 SEK deduction from each team member. This creates a great atmosphere where the team stays together and collectively takes responsibility.

CTO dashboard

We’ve prepared a CTO dashboard where all teams and their performance are shown in one big table. Each row represents one team, and each column represents one month.

Any team that is below the 99.9999% xSLO target will be marked red. This allows quick assessment of the pulse of the organization so the CTO can focus on the outliers.

This also allows the CTO to benchmark teams against each other and interrogate the outliers about why they cannot match the performance of the other teams.

Dissecting the joke

A joke isn’t funny if you have to explain it, but given the technical nature of this joke and the background required, let’s use it as a pedagogical opportunity to learn about Service Levels.

In preparation for the new CTO joining the company, we’re doing some house cleaning to make sure that we make a good first impression.

This first sentence may give the joke away. There hasn’t been any news about a new CTO.

The rest of the joke is a classic example of mandate abuse, and I needed to tie the draconian policy to some sort of mandate-heavy role. I have seen these metrics being weaponized by CTOs at other companies, so it felt appropriate to tie it to this role.

Plus, Volvo Cars currently doesn’t have a CTO, which reduces the risk of this joke being escalated unnecessarily.

Accelerated SLO

One of the main reasons we implemented service levels is to acknowledge team autonomy and hold teams accountable for key product metrics.

There’s a small but very important detail there: “key product metrics”. An SLI is different from a KPI (key performance indicator).

Service Levels are primarily consumed by the engineers to be on top of the reliability of the technical solution.

As we discussed before, you should never be responsible for what you don't control. Engineers don’t control all the factors that contribute to a product metric like MAU (monthly active users), ARR (annual recurring revenue), churn, etc.

Sure, the engineers control the technical solution which contributes to those metrics, but they don’t have full control over market demands, competition, marketing, sales, etc.

Another way to think about it is on-call. A good SLI ties to alerts in order to formalize the team’s commitment to service reliability. It may make sense to wake someone up in the middle of the night if the site is down, but not if market demand shifts overnight! To reduce alert fatigue, we aim to find SLIs that match exactly what the engineers control, and nothing more.
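To make the on-call point concrete, here is a minimal sketch, assuming an event-based SLI (successful requests divided by valid requests) and a hypothetical 99.9% SLO. The `Window` type, the function names, and all numbers are illustrative assumptions, not an actual internal tool. Because the SLI is a ratio, a drop in traffic leaves it unchanged, while a partial outage pushes it below the SLO and triggers a page.

```python
from dataclasses import dataclass


@dataclass
class Window:
    """Request counts observed in one alerting window (hypothetical data model)."""
    valid: int  # requests the service was expected to handle
    good: int   # requests that were handled successfully


def sli(window: Window) -> float:
    """Event-based SLI: the share of valid requests that succeeded."""
    if window.valid == 0:
        return 1.0  # no traffic means nothing failed
    return window.good / window.valid


def should_page(window: Window, slo: float) -> bool:
    """Page only when reliability, not demand, drops below the SLO."""
    return sli(window) < slo


SLO = 0.999  # hypothetical target, for illustration only

normal = Window(valid=100_000, good=99_950)
demand_drop = Window(valid=40_000, good=39_980)  # market demand shifts overnight
outage = Window(valid=100_000, good=60_000)      # the site is partly down

for name, w in [("normal", normal), ("demand drop", demand_drop), ("outage", outage)]:
    print(f"{name}: SLI={sli(w):.4f} page={should_page(w, SLO)}")
```

A production setup would typically evaluate this over rolling windows in the monitoring stack; the sketch only shows the shape of the decision.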

If 2023 was about putting the SLO leash on teams, 2024 will be about tightening that leash.

Since April 2023, I have been tasked with helping my organization implement Service Levels by:

Building a common language to speak about reliability (SLI, SLO, SLA)

Measuring impactful metrics (Service Level Indicators)

Setting reasonable expectations (Service Level Objectives)

Improving operational excellence (on-call, incident handling, etc.)

I accepted this responsibility on two conditions:

Service Levels will never be weaponized: I have seen companies that abuse service levels as a metric to measure the performance of teams and benchmark them against each other. As Goodhart’s law states: "When a measure becomes a target, it ceases to be a good measure".

It should be opt-in: I am not going to chase any team to adopt service levels. Apart from box-ticker being a type of bullsh*t job (I highly recommend watching that YouTube video), I don’t believe in one-size-fits-all policies. So, like a flea market salesman, I stood on the side and did internal marketing to attract the teams who wanted to voluntarily measure the right thing (SLI) and be responsible for it (SLO). I relied on word-of-mouth marketing for teams to volunteer to use service levels.

Calling the SLO a “leash” and then “tightening it” is the exact opposite of what I tell my teams.

We call it Accelerated SLO, or xSLO for short. Here’s how it works:

xSLO is just a made-up term to make it sound sophisticated.

Volvo = six nines

At the top level, we aim for an xSLO of 99.9999% for two reasons:

Our brand is synonymous with safety and zero risk tolerance. We push the industry standards with 1 extra nine to demonstrate our commitment to this perception.

Our products are too expensive to afford any failure. We decided that we can have a maximum of 1 software failure in every 1,000,000 interactions for all products.

It is undeniable that Volvo takes safety very seriously and when it comes to software, we carry the same expectation.

But 6 nines is crazy for software that is not life-critical. To put it in context, 5 nines (99.999%) is the realm of high availability (HA) systems, which are very expensive to build and maintain.

Depending on whether the SLI is event-based or time-based, an SLO of 99.9999% gives you an error budget of:

1 failure in 1,000,000 events

about 2.6 seconds of downtime per 30-day month
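The arithmetic behind those two numbers is the allowed failure fraction (1 - SLO) multiplied by either the number of events or the length of the measurement window. A minimal sketch, assuming a 30-day window for the time-based case (which is where the roughly 2.6 seconds comes from); the function names are hypothetical:

```python
def event_budget(slo: float, events: int) -> float:
    """Event-based SLI: how many of `events` may fail without breaching the SLO."""
    return (1 - slo) * events


def time_budget_seconds(slo: float, window_days: int = 30) -> float:
    """Time-based SLI: seconds of downtime allowed within the window."""
    return (1 - slo) * window_days * 24 * 60 * 60


for nines, slo in [(3, 0.999), (4, 0.9999), (5, 0.99999), (6, 0.999999)]:
    print(
        f"{nines} nines: {event_budget(slo, 1_000_000):.0f} failures per million, "
        f"{time_budget_seconds(slo):.1f} s of downtime per 30 days"
    )
```

Each extra nine divides the budget by ten: about 26 seconds per month at 5 nines and about 2.6 seconds at 6 nines.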

This is the realm of airport control tower software or hospital systems. Is it doable? Absolutely. Is it worth it? Probably not for the software behind a car sales site. But it makes sense for software that controls the braking system or the car engine.

The exact SLO depends on the consequences, risk appetite, and type of product.

Regardless of the SLI, any team that has an SLO lower than 6 nines will collectively face the consequences in the policy.

There are a few things wrong here:

6 nines is crazy, as we discussed.

Using the same SLO for all systems is crazy (there is, however, a way to calculate a composite SLO for complex systems).

Connecting any metric to punishment is the best way to ensure that it will be gamed and will create perverse incentives. You may have heard of the cobra effect as an example of that.

Collective punishment is a fantastic way to cultivate a culture of finger-pointing and blame.
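On the composite SLO point, a common textbook approximation (not necessarily the exact method used in the workshops) multiplies the availabilities of components that a request must pass through in series, and treats redundant replicas as failing only when all of them fail. Both rules assume independent failures. The system layout and numbers below are hypothetical:

```python
from math import prod


def serial(*availabilities: float) -> float:
    """Every component must succeed: multiply availabilities (independent failures assumed)."""
    return prod(availabilities)


def parallel(*availabilities: float) -> float:
    """Redundant replicas: the request fails only if every replica fails."""
    return 1 - prod(1 - a for a in availabilities)


# Hypothetical system: a frontend calling an API backed by two redundant databases.
frontend, api, db = 0.999, 0.999, 0.99
system = serial(frontend, api, parallel(db, db))
print(f"composite availability: {system:.5f}")
```

With these made-up numbers the whole system lands at roughly 99.79%, lower than any of its serial components, which is one reason a single uniform target for every system makes little sense.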

SLO policy with teeth

A policy without execution mechanics is merely a suggestion.

This is one of the few sentences in the joke that I actually believe in.

Too often I see someone issuing a policy without having any mechanism to enforce it or offering a hand in executing it. These policies are effectively just recommendations.

That's why we connect xSLO to the payroll system. The xSLO will be tied to team bonuses and the error budget will be paid from team member salaries. Here’s how it works:

The annual bonus will be calculated based on what is in your contract minus the part of the SLO that your team failed to achieve. The SLO that will be used is the top-level xSLO, not the team-level SLO. For example, if the bonus was supposed to be 10,000 SEK and your team’s service levels in the past quarter were at 85%, you’ll only get an 8,500 SEK bonus. Tying performance metrics to tangible rewards has been one of the key ideas that big tech uses to improve the quality of service, and we have no reason to diverge from that best practice.

The error budget will be tied to salaries. We’re now at the final stage of integrating our observability platforms into the payroll system and hope to be done before the CTO joins. When we’re done, any error budget will be deducted directly from the team’s collective salary during the same month. For example, if a team of 10 people causes two failed sales and the average sale makes 100,000 SEK for the company, the team must compensate the company a total of 200,000 SEK, which leads to a 20,000 SEK deduction from each team member. This creates a great atmosphere where the team stays together and collectively takes responsibility.

This part is just using math to add “teeth” to the SLO policy. For the record, I am fully against tying SLOs (or any metric) to monetary incentives.

SLAs are a completely different story, by the way.

CTO dashboard

We’ve prepared a CTO dashboard where all teams and their performance are shown in one big table. Each row represents one team, and each column represents one month.

Any team that is below the 99.9999% xSLO target will be marked red. This allows quick assessment of the pulse of the organization so the CTO can focus on the outliers.

This also allows the CTO to benchmark teams against each other and interrogate the outliers about why they cannot match the performance of the other teams.

You may find this ridiculous, but I have worked at a company where this evil dashboard was implemented. It was called Single Pane of Glass (SPOG) and I think IBM describes it best:

Single pane of glass (SPOG) refers to a dashboard or platform that provides centralized, enterprise-wide visibility into various sources of information and data to create a comprehensive, single source of truth in an organization.

The idea itself isn’t toxic (who doesn’t want a reliable, centralized source of truth?); the devil is in how it is implemented and used:

SPOG often misses the nuances needed to answer WHY something has failed. It merely says WHAT went wrong, without the possibility to drill down into the data and take meaningful action. This often leads to frustrated leadership and finger-pointing.

SPOG is sold as a self-service decision-making tool, but data often fails to tell the right story due to bias. The full story often requires a dialogue, at which point you are right to question the investment in SPOG in the first place. Implementing SPOG is complex and requires a high level of maturity to use properly, especially for leaders who are abstracted away from engineering.

SPOG makes it too easy to benchmark teams against each other. Different teams have different services, talents, deadlines, requirements, technologies, and risks, and generally can’t be compared. SPOG is a powerful tool, and in the wrong hands it can cause a lot of damage.

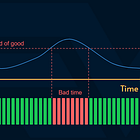

This, among many other reasons, is why I put a meme in the following article. It looks like a joke, but for me it is a memory: