Service level adoption obstacles

Why do many organizations fail to adopt and implement service levels properly, and what practical tips help overcome the pitfalls?

I’ve been working with Service Levels (SLI/SLO) across several companies and organizations over the past few years. I’ve run 70+ service level workshops, built a tool with lots of examples, and talked to hundreds of engineers and leaders to get them on board with service levels. I’ve done podcast episodes, talked to other companies, and even drafted a book about it.

🤖🚫 Note: No AI was used to generate this content. This essay is intended only for human consumption and is NOT allowed to be used for machine training, including but not limited to LLMs.

It is safe to say that I am invested in service levels 😄 If I had to sum up that experience in one sentence, it would be:

Service levels are the best tool to measure and optimize the reliability of complex software systems, but they are heavily underrated.

But why?

First, let’s unpack the first part: why are service levels the best tool to measure and optimize reliability?

Service levels measure the service, not the people who provide it. They encourage blameless postmortems to learn from incidents instead of finger-pointing.

Service levels debunk the assumption that “systems should never fail” and the inevitable panic when they do. We acknowledge that complex systems fail all the time and shift the conversation to identifying “what is failure” (SLI) and how much of it the service consumers can tolerate (the error budget, which is the complement of the SLO).
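
To make “the complement of the SLO” concrete, here is a minimal Python sketch. It assumes an event-based SLI (good events divided by valid events) and an SLO given as a percentage string; the function names and the traffic numbers are hypothetical, not taken from any particular tool.

```python
from fractions import Fraction
import math


def sli_percent(good_events: int, valid_events: int) -> float:
    """Event-based SLI: the percentage of valid events that were good."""
    if valid_events <= 0:
        raise ValueError("valid_events must be positive")
    return 100 * good_events / valid_events


def error_budget(slo_percent: str) -> Fraction:
    """Error budget as a fraction of valid events: the complement of the SLO.

    Fraction parses decimal strings exactly, so "99.9" avoids float noise
    (with floats, 1 - 0.999 evaluates to 0.0010000000000000009).
    """
    slo = Fraction(slo_percent) / 100
    if not 0 < slo < 1:
        raise ValueError("SLO must be strictly between 0% and 100%")
    return 1 - slo


def allowed_bad_events(slo_percent: str, valid_events: int) -> int:
    """How many bad events the budget tolerates for a given number of valid events."""
    # Round down: a budget of 1.5 bad events only tolerates 1 whole bad event.
    return math.floor(valid_events * error_budget(slo_percent))


def remaining_budget(slo_percent: str, good_events: int, valid_events: int) -> int:
    """Bad events still tolerable in this window (negative means the budget is spent)."""
    bad_events = valid_events - good_events
    return allowed_bad_events(slo_percent, valid_events) - bad_events


# Hypothetical month: 1,000,000 valid requests, 999,400 of them good.
print(sli_percent(999_400, 1_000_000))               # 99.94
print(error_budget("99.9"))                          # 1/1000
print(allowed_bad_events("99.9", 1_000_000))         # 1000
print(remaining_budget("99.9", 999_400, 1_000_000))  # 400
```

With a 99.9% SLO the consumers tolerate 0.1% failure, so 600 failed requests out of a million leave 400 bad events of budget for the rest of the window. Whether to round down, and whether to count in events or in time, are design choices each team has to make explicitly.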

Service levels normalize failures by using percentages to put them in a larger perspective. They reduce alert fatigue by alerting on the error budget burn rate.
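
Burn rate compares how fast the error budget is being consumed with the pace that would spend it exactly by the end of the SLO window: a burn rate of 1 uses up the budget right on time, a burn rate of 10 uses it up ten times faster. The sketch below is a minimal illustration, assuming an event-based SLI, a 30-day SLO window, and a hypothetical paging policy of “page if 2% of the monthly budget burns within one hour”. The names and numbers are illustrative only.

```python
from fractions import Fraction


def burn_rate(bad_events: int, valid_events: int, slo_percent: str) -> Fraction:
    """Observed error rate divided by the error rate the SLO allows."""
    if valid_events <= 0:
        raise ValueError("valid_events must be positive")
    allowed_error_rate = 1 - Fraction(slo_percent) / 100  # the error budget
    observed_error_rate = Fraction(bad_events, valid_events)
    return observed_error_rate / allowed_error_rate


def burn_rate_threshold(budget_share: str, alert_window_hours: int,
                        slo_window_hours: int) -> Fraction:
    """Burn rate at which `budget_share` of the whole budget is spent in the alert window."""
    return Fraction(budget_share) * slo_window_hours / alert_window_hours


def should_page(bad_events: int, valid_events: int, slo_percent: str,
                threshold: Fraction) -> bool:
    return burn_rate(bad_events, valid_events, slo_percent) >= threshold


SLO_WINDOW_HOURS = 30 * 24  # assumed 30-day window = 720 hours

# Hypothetical policy: page if 2% of the monthly budget burns within 1 hour.
threshold = burn_rate_threshold("0.02", alert_window_hours=1,
                                slo_window_hours=SLO_WINDOW_HOURS)
print(float(threshold))  # 14.4

# Hypothetical last hour: 10,000 valid requests, 150 failed, 99.9% SLO.
rate = burn_rate(150, 10_000, "99.9")
print(rate)                                          # 15
print(should_page(150, 10_000, "99.9", threshold))   # True
print(SLO_WINDOW_HOURS / rate)  # 48 (hours until a full budget is gone at this pace)
```

A 1.5% error rate sounds small, but against a 0.1% budget it is a burn rate of 15, which would exhaust a full month of budget in two days. Alerting on that ratio, rather than on a raw error count, is what lets a team ignore brief blips and still react quickly to a real outage.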

Service levels give direction to optimization efforts. Premature optimization is when the wrong thing is optimized, or at the wrong time, or for the wrong trade-offs. Service levels provide leverage to motivate optimization and data to prevent premature optimization.

Service levels put a cap on the optimization effort because there is such a thing as “reliable enough”, given the cost of reliability. They help find a balance between innovation and stability: the error budget is a tool for controlling risk. They encourage treating reliability as a feature to be weighed against other features, considering its ROI (rule of 10x/9).

Service levels map responsibility to control and can guide organizational architecture to reduce handovers and the risk of things falling through the cracks. They also map reliability (which lives in the system architecture graph) to accountability (which lives in the org chart tree), as we will see in a future article.

Service levels balance autonomy and alignment: what goes on inside a team is no one else’s business as long as the service meets the expected level. They put “you build it, you own it” into practice (and prevent broken ownership).

And last but not least, service levels establish a culture of measurement, data-informed decisions, and talking to consumers (to understand how they perceive the service).

Now to the second part: why are service levels heavily underrated?

Mental shift

For too long, the conventional wisdom was to pick some metrics (often chosen by engineers without talking to consumers), attach alerts to metric thresholds, and panic at every incident.

This vicious cycle was reinforced by a plethora of tools built around this way of working.

In my experience, the so-called “juniors” who have no experience with monitoring, on-call, or incident handling are much more receptive to giving service levels a try.

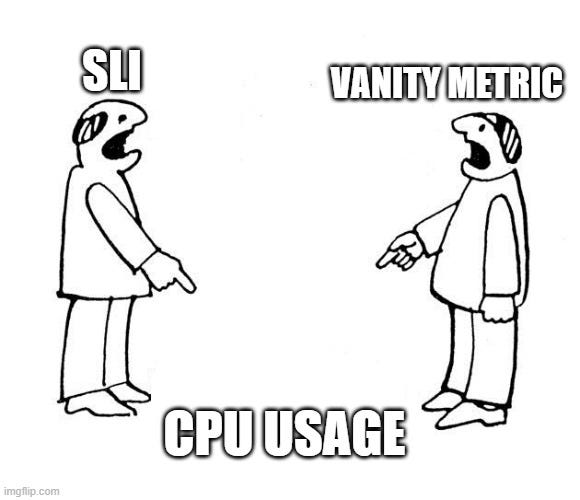

On the other hand, the “seniors” are often more opinionated, reluctant, or flat-out resistant to implementing service levels properly. Instead, they typically take the high-level concept and adapt it to their traditional workflow. The vanity metrics are now called “Service Levels”. Problem solved!

What gives? The baggage!

For senior knowledge workers, the ability to unlearn is as important as the ability to learn.

Often, we are trapped in our perceptions, which are shaped by our career trajectory. When we’re too attached to those perceptions, we fail to gain new perspectives.

As we have discussed before, a good SLI is connected to how consumers perceive reliability. That is why SLIs are measured at the boundary of the service the team owns. Traditional metrics often measure the internal vitals of the system and have little to no relation to how consumers perceive service reliability.

The mindset alone is important enough that I devoted a third of my book Reliability Engineering Mindset to its individual and collective aspects.

Jargon & Math

SLI, SLO, SLS, SLA, error budget, Burn Rate, Valid, Threshold, Time/Event-based, Composite, Multi-Tier, … Yeah! And all you wanted was to make informed decisions to optimize service reliability 🥲

The language of reliability and data has a learning curve, and it takes time to speak this language fluently.

I have written many articles that break down these topics with illustrations and examples. I’ve also created the Service Level Calculator with many templates.

As AI writes more code, we are simultaneously becoming more dependent on complex, interconnected systems. That increases the need for people who can reason about the behavior of the “black box” and measure and optimize its reliability.

Arguably, the math behind service levels is far simpler than what is taught in 10th grade. Still, math can be intimidating to some people.

I have made an interactive tool to make the service level math more approachable. Concepts like percentiles can be counter-intuitive at first, but once you get the hang of them, they make you a better critical thinker and more comfortable with data and metrics.
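
As a small example of why percentiles can feel counter-intuitive, here is a minimal Python sketch using the nearest-rank definition of a percentile. That is one of several common definitions; monitoring tools often interpolate or approximate instead. The latency numbers are made up for illustration.

```python
import math


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of values at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def share_at_or_below(values: list[float], threshold: float) -> float:
    """Latency SLI: the percentage of requests at or below a latency threshold."""
    return 100 * sum(1 for v in values if v <= threshold) / len(values)


# Made-up latencies in ms: 95 fast requests and 5 very slow ones.
latencies = [100.0] * 95 + [2000.0] * 5

print(sum(latencies) / len(latencies))   # 195.0 (the mean looks harmless)
print(percentile(latencies, 50))         # 100.0
print(percentile(latencies, 95))         # 100.0
print(percentile(latencies, 99))         # 2000.0 (1 in 20 requests takes 2 seconds)
print(share_at_or_below(latencies, 300)) # 95.0
```

Note that the mean (195 ms) is a latency no request actually had, and that p95 and p99 tell very different stories because the slow requests sit exactly in the top 5%. Phrasing the SLI as “percentage of requests at or below 300 ms” sidesteps some of that confusion and plugs directly into the error budget math.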

Poor tooling

Let’s face it: 21 years after the term SRE was coined, and 8 years after Google went public with it in a big way, the tooling built around service levels is crap at best!

I don’t mean to offend anyone, but I have thoroughly investigated many tools and shared some of my findings in another post.

NO ONE GOT IT RIGHT! It is as if they just wanted to check a box to add SLO as a feature to their products.

SLO is not just a feature. It is the best way to derive value from observability data.

The state of tooling is so bad that companies like Nobl9 found a niche to build a business around.

And the native tooling you get out of the box from your favorite cloud provider is mediocre at best. The only exception is Google Cloud, for obvious reasons!

Poor tooling leads to premature implementation which ultimately leads to negative experience.

Premature implementation

Google knows the formula to make something popular: give it away for free!

As part of their strategy to build credibility and bring developers to their cloud platforms, Google released their excellent SRE books. Many people got excited and started cargo culting.

What happened in reality was that they read parts of the book (Chapter 2 is a favorite) and quickly started implementing. They provisioned APM (application performance monitoring), built/bought observability platforms, made dashboards and set alerts.

The tooling aspect is always more attractive to the average engineer than the overarching cultural and mental shifts.

Fast forward a few years and those dashboards are gathering dust and the only people who care about them are the “SREs” who implemented them.

A common symptom is when leadership aims for a one-size-fits-all solution, e.g., measuring availability for all teams regardless of their service.

Negative experience

I've seen service levels weaponized by leadership, and vanity metrics measured that led to no action or, worse, to the wrong actions.

Just because someone abused a tool, it doesn't mean the tool is bad. I’ve heard multiple stories about companies hiring leaders from big tech only to end up doing the mechanical part (tooling, measuring something, dashboards on TV, etc.) without shifting the deeper belief systems and ways of working.

Prior negative experience can be a huge obstacle to giving service levels another try.

Service levels measure services, not people or their productivity.

Unrealistic expectations

Many engineers have had negative experiences with transparency (and with measurement in general).

For SLOs to deliver their full potential, we must create a culture that is comfortable with facing the service consumer, measuring impact, transparency, accountability, and full ownership.

A common symptom is when leadership’s expectations are far beyond the organization’s capability. We’ve covered the rule of 10x/9 in another post.

The SRE stigma

The concept of service levels gained popularity with Google’s SRE book. Many organizations don’t do SRE because “Platform Engineering deprecated SRE!”, or “SRE works at Google”.

What is SRE, really? I have identified 4 archetypes, but it boils down to applying software engineering principles to operations!

There’s NOTHING in service levels as a concept that requires someone with “SRE” in their job title.

If you have a service, you have a service level, whether you acknowledge, measure, and communicate it or not. And if you have engineers, you should be able to:

Identify the right metrics by talking to service consumers

Tie responsibility to mandate using alerts

Normalize failures and see them as learning opportunities

Use data to motivate optimization

Put failures in perspective using percentages

Use automation to reduce toil

etc.

According to Ben Treynor Sloss, who coined the term, SRE is what you get when you put software engineers in charge of operations. So let’s not overcomplicate it with job titles or, even worse, by splitting the role into multiple titles.

My monetization strategy is to give away most content for free. However, these posts take anywhere from a few hours to a few days to draft, edit, research, illustrate, and publish. I pull these hours from my private time, vacation days and weekends.

You can support me by sparing a few bucks for a paid subscription. As a token of appreciation, you get access to the Pro-Tips sections as well as my online book Reliability Engineering Mindset. Right now, you can get 20% off via this link. You can also invite your friends to gain free access.
